Some good articles by Neal Ford (ThoughtWorks) on the IBM developerWorks site:

Emergent Design

Functional Thinking

Mostly Java with a bit of Groovy.

These are in line with much of my thinking these days regarding immutability, coupling, composition, etc.

Tuesday, August 30, 2011

Saturday, August 27, 2011

Why Amazon Can't Make a Kindle in the USA

Wednesday, August 17, 2011

Benchmark Surprises

We recently ran into a minor bug in Suneido - tracing temporary indexes in database queries would sometimes report queries that didn't actually have temporary indexes.

I found the problem right away. The query optimization looks at multiple different "strategies"; some involve temporary indexes and some don't. The flag that indicated whether a temporary index was needed wasn't getting reset between strategies. This flag wasn't used for much, so the problem hadn't been noticed.
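A minimal sketch of this kind of bug, not Suneido's actual code: `Strategy`, `Optimizer`, `needsTempIndex`, `chooseBuggy`, and `chooseFixed` are all hypothetical names made up for illustration.

```scala
// Hypothetical stand-in for a query strategy; not Suneido's real types.
case class Strategy(name: String, cost: Double, usesTempIndex: Boolean)

class Optimizer {
  // Mutable state that outlives the evaluation of any single strategy.
  var needsTempIndex = false

  // Buggy: the flag is set while exploring and never reset, so it "sticks"
  // once any strategy that uses a temporary index has been examined,
  // even if that strategy is not the one chosen.
  def chooseBuggy(strategies: Seq[Strategy]): Strategy = {
    needsTempIndex = false
    var best = strategies.head
    for (s <- strategies) {
      if (s.usesTempIndex) needsTempIndex = true
      if (s.cost < best.cost) best = s
    }
    best
  }

  // Fixed: the flag reflects only the strategy that was finally chosen.
  def chooseFixed(strategies: Seq[Strategy]): Strategy = {
    val best = strategies.minBy(_.cost)
    needsTempIndex = best.usesTempIndex
    best
  }
}

val strategies = Seq(
  Strategy("with temp index", 10, usesTempIndex = true),
  Strategy("without temp index", 5, usesTempIndex = false))
```

With these two strategies, both methods pick the cheaper one, but only the buggy version leaves the flag claiming a temporary index is needed.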

The problem was, when I fixed the bug, a bunch of query optimization tests started failing. That surprised me because the flag wasn't used much, and on top of that, the change fixed the flag - why would that break things?

It turned out the one other place where the flag was used was in join optimization. And unintentionally, the code had ended up dependent on the incorrect flag. Ouch. It turned out surprisingly hard to figure out what was going on. The query optimization is complex and because it explores many different strategies (to find the least cost) any debugging output is large and hard to follow.

Eventually I tracked it down to the relative estimated costs of temporary indexes versus look-ups by joins (which was tied indirectly to the flag).

I'm embarrassed to say that I have never really done good benchmarking to check that the "cost" estimates in the code matched the real execution times. Most of the estimates are seat-of-the-pants guesses, adjusted to work reasonably. It's not as bad as it sounds because there's usually one strategy that is much better than the rest, so you don't really need to be exact.

I "fixed" it (so the tests passed) by greatly reducing the cost estimate for look-ups, but I wasn't sure if that just happened to fix it, or if the cost of look-ups was actually a lot lower than I originally thought. (Actually, one test still "failed" but the new strategy looked better than the old one so I "fixed" the test.)

I took a few hours and did some quick benchmarks. The results were surprising. The optimization "cost" takes into account index key size and record size, but it turned out these had very little effect on the execution time. The only significant factor (in the tests I ran) was the number of records processed. A temporary index roughly doubled the time. Joins took roughly the sum of the time of reading the two sides separately; the number of look-ups had little effect.

Note: These results are very specific to Suneido; I would not expect them to be applicable elsewhere. They are also working with data that fits comfortably in memory, so disk access is not a factor. That might seem unrealistic, but that's actually the normal case with our systems.

One good thing about the results is that (I think) it validates part of the database implementation. The code is careful to not copy data - the database file is mapped into memory, and that "raw" memory becomes (within a wrapper object) the in-memory record with no copying or conversion. This is likely the reason that record size (and maybe index key size) had little effect on speed. (Since I assume copying and conversion would take time proportional to record size.)
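To illustrate the no-copy idea, here is a rough sketch using `java.nio.ByteBuffer`. It is an assumption-laden stand-in, not Suneido's record format: `Record`, `recordView`, and `byteAt` are hypothetical names, and a heap buffer from `ByteBuffer.allocate` takes the place of the memory-mapped database file.

```scala
import java.nio.ByteBuffer

// Hypothetical wrapper: a record is just a view onto a region of a
// larger buffer. Nothing is copied or converted when it is created.
final class Record(data: ByteBuffer) {
  def size: Int = data.remaining()
  def byteAt(i: Int): Byte = data.get(i) // absolute read within the view
}

// Wrap `length` bytes starting at `offset` without copying them.
def recordView(file: ByteBuffer, offset: Int, length: Int): Record = {
  val dup = file.duplicate() // shares content, has its own position/limit
  dup.limit(offset + length)
  dup.position(offset)
  new Record(dup.slice())    // view covering only [offset, offset + length)
}

// Stand-in for the mapped database file.
val file = ByteBuffer.allocate(16)
file.put(4, 42.toByte)
val rec = recordView(file, 4, 2)
```

Creating the view costs the same regardless of `length`, which fits the observation that record size had little effect on speed. Because the view shares memory with the underlying buffer, a later write to `file` at offset 4 is visible through `rec`.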

I ended up refactoring the join optimization to not use the flag at all. That left only the tracing using it, and it was easy to rewrite that to work another way. So I ended up removing the problematic flag entirely. Nice.

I'm left with the realization that my query optimization code is likely a lot more complicated than it needs to be. It looks like it would work just as well if I ignored record size and index key size and just based costs on the estimated number of records to be processed at each stage. But I think I'll leave that for another time.
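A sketch of what such a simplified cost model might look like, using only the rough ratios from the benchmarks above (a temporary index about doubles the time, a join costs about the sum of its two sides). The `Plan`, `Scan`, `TempIndex`, and `Join` types and the `cost` function are hypothetical, not Suneido's optimizer.

```scala
// Hypothetical query plan nodes, for illustration only.
sealed trait Plan
case class Scan(records: Long) extends Plan           // read this many records
case class TempIndex(source: Plan) extends Plan       // build a temporary index
case class Join(left: Plan, right: Plan) extends Plan

// Cost in "records processed"; record size and key size are ignored.
def cost(plan: Plan): Double = plan match {
  case Scan(n)        => n.toDouble
  case TempIndex(src) => 2 * cost(src)      // temp index roughly doubled the time
  case Join(l, r)     => cost(l) + cost(r)  // roughly the sum of both sides
}

// 1000 + 2 * 500 = 2000
val joinCost = cost(Join(Scan(1000), TempIndex(Scan(500))))
```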

Sunday, August 07, 2011

Scala Again

Recently I've been re-reading Odersky's Scala book (the new edition). I looked back at my original blog posts about Scala and was surprised to see they were over two years old. Time flies!

I set up a copy of Eclipse (3.7 Indigo) with the latest Scala plugin (2.0.0beta09) so I could play around.

One of the examples in the book (and other places) is using case classes and pattern matching to simplify algebraic equations. I do some of that in Suneido (both in the language and the database query optimization) so it's somewhat familiar. Here are the classes:

I chose two optimizations - subtraction of two constant numbers, and addition of zero

This version works for a single level:

The obvious way to make it handle multiple levels is:

But this is top down, which doesn't handle cases like:

BinOp("+", Var("x"), BinOp("-", Num(2), Num(2)))

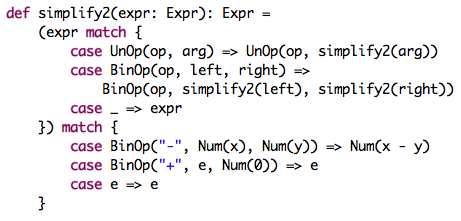

We need to operate bottom up, which means processing a node's children before itself:

BinOp("+", Var("x"), BinOp("-", Num(2), Num(2)))

One thing that bugs me about the recursive versions is that they copy every node, even if they're not changing anything. In Suneido I do something like:

But that seemed verbose and didn't fit with this Scala code. Instead, I added "alter" methods to UnOp and BinOp like:

so simplify only had to change slightly:

Of course, I ask myself if this is premature optimization. Obviously, for this simple test, it's unnecessary. On the other hand, it was part of my exploration.

I always struggle with this question of premature optimization. The problem is (as I've written about before) if you totally ignore performance issues, you can easily end up with a program that, for example, does several times as much allocation as it needs to. And that sub-optimal code won't be conveniently in one hot spot - it'll be spread throughout your code. Allocation in modern JVM's is very fast, but garbage collection and memory bandwidth and cache effects still cost.

The more I play with Scala, the more I like it.

I set up a copy of Eclipse (3.7 Indigo) with the latest Scala plugin (2.0.0beta09) so I could play around.

One of the examples in the book (and other places) is using case classes and pattern matching to simplify algebraic equations. I do some of that in Suneido (both in the language and the database query optimization) so it's somewhat familiar. Here are the classes:
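The class definitions themselves are missing from this copy of the post. A minimal sketch, modeled on the usual case class expression hierarchy: the class names `Var`, `Num`, `UnOp`, and `BinOp` come from the examples in this post, `op` and `arg` come from the Suneido snippet further down, and the remaining field names (and `Num` holding an `Int`) are assumptions.

```scala
// Expression tree for simple algebraic expressions.
sealed trait Expr
case class Var(name: String) extends Expr
case class Num(value: Int) extends Expr
case class UnOp(op: String, arg: Expr) extends Expr
case class BinOp(op: String, left: Expr, right: Expr) extends Expr

// x + (2 - 2), built by hand
val example: Expr = BinOp("+", Var("x"), BinOp("-", Num(2), Num(2)))
```

Case classes provide structural equality and pattern matching support for free, which is what the simplification rules rely on.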

I chose two optimizations - subtraction of two constant numbers, and addition of zero.

This version works for a single level:

BinOp("-", Num(2), Num(2)) => Num(0)

BinOp("+", Var("x"), Num(0)) => Var("x")
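The single-level version is also missing here. A sketch consistent with the two results above might look like this (class field names are assumptions, as is matching zero only on the right-hand side):

```scala
sealed trait Expr
case class Var(name: String) extends Expr
case class Num(value: Int) extends Expr
case class UnOp(op: String, arg: Expr) extends Expr
case class BinOp(op: String, left: Expr, right: Expr) extends Expr

// Applies the two rules to the top node only; children are not visited.
def simplify(expr: Expr): Expr = expr match {
  case BinOp("-", Num(a), Num(b)) => Num(a - b) // subtraction of two constants
  case BinOp("+", e, Num(0))      => e          // addition of zero
  case _                          => expr       // anything else is unchanged
}
```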

The obvious way to make it handle multiple levels is:

But this is top down, which doesn't handle cases like:

BinOp("+", Var("x"), BinOp("-", Num(2), Num(2)))

=> BinOp("+",Var("x"),Num(0))
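A sketch of a top-down version that reproduces this result (an assumed reconstruction, not the original code): each rule is tried on the node first, and only if none matches does it recurse into the children, so the parent never sees its simplified children.

```scala
sealed trait Expr
case class Var(name: String) extends Expr
case class Num(value: Int) extends Expr
case class UnOp(op: String, arg: Expr) extends Expr
case class BinOp(op: String, left: Expr, right: Expr) extends Expr

// Top down: match the node as it is, then recurse into the children.
def simplify(expr: Expr): Expr = expr match {
  case BinOp("-", Num(a), Num(b)) => Num(a - b)
  case BinOp("+", e, Num(0))      => simplify(e)
  case BinOp(op, l, r)            => BinOp(op, simplify(l), simplify(r))
  case UnOp(op, a)                => UnOp(op, simplify(a))
  case _                          => expr
}

// The "+" node is examined while its right child is still 2 - 2,
// so the addition-of-zero rule never gets a chance to fire.
val result = simplify(BinOp("+", Var("x"), BinOp("-", Num(2), Num(2))))
```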

We need to operate bottom up, which means processing a node's children before itself:

=> Var("x")
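One way to sketch the bottom-up version (again a reconstruction under the same assumptions; `simplifyTop` is a hypothetical helper name): simplify the children first, rebuild the node, then apply the single-level rules to the rebuilt node.

```scala
sealed trait Expr
case class Var(name: String) extends Expr
case class Num(value: Int) extends Expr
case class UnOp(op: String, arg: Expr) extends Expr
case class BinOp(op: String, left: Expr, right: Expr) extends Expr

// Single-level rules, applied to one node only.
def simplifyTop(expr: Expr): Expr = expr match {
  case BinOp("-", Num(a), Num(b)) => Num(a - b)
  case BinOp("+", e, Num(0))      => e
  case _                          => expr
}

// Bottom up: children are simplified before the node itself.
def simplify(expr: Expr): Expr = expr match {
  case BinOp(op, l, r) => simplifyTop(BinOp(op, simplify(l), simplify(r)))
  case UnOp(op, a)     => simplifyTop(UnOp(op, simplify(a)))
  case _               => expr
}

val result = simplify(BinOp("+", Var("x"), BinOp("-", Num(2), Num(2))))
```

Here `2 - 2` becomes `Num(0)` before the `+` node is examined, so the addition-of-zero rule applies and `result` equals `Var("x")`.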

One thing that bugs me about the recursive versions is that they copy every node, even if they're not changing anything. In Suneido I do something like:

    arg = simplify(node.arg)
    if (arg != node.arg)
        node = new UnOp(node.op, arg)

But that seemed verbose and didn't fit with this Scala code. Instead, I added "alter" methods to UnOp and BinOp like:

so simplify only had to change slightly:
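Neither the "alter" methods nor the revised simplify survive in this copy. A sketch of how they might fit together, with assumed signatures: `alter` returns the existing node when the children are the same objects (checked with `eq`, reference equality), and only allocates a new node otherwise.

```scala
sealed trait Expr
case class Var(name: String) extends Expr
case class Num(value: Int) extends Expr
case class UnOp(op: String, arg: Expr) extends Expr {
  // Reuse this node if the child is the very same object.
  def alter(newArg: Expr): UnOp =
    if (newArg eq arg) this else UnOp(op, newArg)
}
case class BinOp(op: String, left: Expr, right: Expr) extends Expr {
  // Reuse this node if both children are the very same objects.
  def alter(newLeft: Expr, newRight: Expr): BinOp =
    if ((newLeft eq left) && (newRight eq right)) this
    else BinOp(op, newLeft, newRight)
}

def simplifyTop(expr: Expr): Expr = expr match {
  case BinOp("-", Num(a), Num(b)) => Num(a - b)
  case BinOp("+", e, Num(0))      => e
  case _                          => expr
}

// Same bottom-up traversal, but unchanged subtrees are not copied.
def simplify(expr: Expr): Expr = expr match {
  case b @ BinOp(_, l, r) => simplifyTop(b.alter(simplify(l), simplify(r)))
  case u @ UnOp(_, a)     => simplifyTop(u.alter(simplify(a)))
  case _                  => expr
}

val unchanged = BinOp("*", Var("x"), Var("y"))
```

With this version, `simplify(unchanged)` returns the original `unchanged` object itself rather than an equal copy.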

Of course, I ask myself if this is premature optimization. Obviously, for this simple test, it's unnecessary. On the other hand, it was part of my exploration.

I always struggle with this question of premature optimization. The problem is (as I've written about before) that if you totally ignore performance issues, you can easily end up with a program that, for example, does several times as much allocation as it needs to. And that sub-optimal code won't be conveniently in one hot spot - it'll be spread throughout your code. Allocation in modern JVMs is very fast, but garbage collection, memory bandwidth, and cache effects still cost.

The more I play with Scala, the more I like it.
