It started with our summer student deciding to build an "instant messenger" in Suneido. I'm not sure what prompted this, but it seemed like an interesting challenge so I let him run with it.

The trick is the "instant" part. His initial approach was to store the messages in a database table, and poll the table for changes. To make it "instant" he wanted to poll very frequently, at least once a second, and preferably more. We have about 50 users; if they are all polling every second, that's an extra 50 queries per second. I wasn't too keen on this.

I suggested we set up a separate HttpServer for the messenger, and keep the "outstanding" messages in memory, so polling didn't have to hit the database. (New messages were still written to a history table in the database, but that's not as frequent as the polling.) This took the load off the main database server, and made the requests faster.

I explained that a better way to make it "instant" was to use Comet-style long polling, where the server simply doesn't respond to the request until something is available. This means a lot more connections to the server since every client always has an outstanding request, which can be an issue depending on the server. But we were using jSuneido for the messenger HTTP server, and 50 connections wasn't a problem.

But the client side is also an issue. If we're going to be waiting a potentially long time for a response, the client can't be waiting "blocked"; we need to make the request asynchronously. Suneido doesn't have a lot of support for asynchronous programming, but it does have a Thread function that allows you to execute code in a separate "fiber" - not a real thread, more like a coroutine. (On jSuneido "Thread" uses a real thread.)

We've never used Thread much because it has always seemed a little flaky. But it is basically sound because it's what the Suneido database server uses to handle multiple users - and that is solid. And it's also how SocketServer is implemented, and that also works well (e.g. in HttpServer).

One issue is that you want to avoid updating the GUI from a thread. That is easy to do by using Delayed with a 0 delay to process the response. Delayed works via the Windows message queue, which is processed by the main fiber. The code running inside the thread looks like:

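    forever
        {
        // long poll: Http.Get does not return until the server responds
        if "" isnt response = Http.Get(uri)
            // hand the response to the main fiber via the message queue
            Delayed(0, ResponseProcessor(response))
        }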

(plus catching exceptions etc.)

However, we haven't eliminated polling, we've just moved it to the messenger HTTP server, since it must poll for new messages. (Since Suneido doesn't have any way to "wait" for something.) The code looks something like:

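    400.Times
        {
        // return as soon as there is something to send
        if Suneido.MessagesAvailable
            return ...
        Sleep(100) // otherwise wait 100 ms and check again
        }
    return "" // nothing arrived in time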

400 times 100 ms is 40 seconds, less than the default timeout of 60 seconds. Checking once every 100 ms (1/10 of a second) is a light load on the server; most of the time it's sleeping. (Note: this wouldn't work well on a cSuneido HTTP server because Sleep would block all the other fibers.)
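For readers more at home in Java, the server-side loop above might be sketched roughly like this. It is only an illustration of the check-and-sleep pattern, not the messenger's actual code; `LongPoll`, `waitFor`, and the supplier argument are hypothetical names.

```java
import java.util.function.Supplier;

final class LongPoll {
    /**
     * Calls check up to `attempts` times, sleeping `sleepMillis` between
     * tries. Returns the first non-empty result, or "" if nothing arrived.
     * Assumes check never returns null.
     */
    static String waitFor(Supplier<String> check, int attempts, long sleepMillis) {
        for (int i = 0; i < attempts; i++) {
            String result = check.get();
            if (!result.isEmpty())
                return result;
            try {
                Thread.sleep(sleepMillis);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                break; // give up early if the thread is interrupted
            }
        }
        return "";
    }

    public static void main(String[] args) {
        // 400 attempts * 100 ms = 40 s worst case, under a 60 s client timeout
        long ready = System.currentTimeMillis() + 250;
        String msg = waitFor(
                () -> System.currentTimeMillis() >= ready ? "hello" : "",
                400, 100);
        System.out.println(msg);
    }
}
```

Because each waiting request sleeps in its own thread here, this only makes sense on a server with real threads, which matches the note about cSuneido above.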

This has ended up working remarkably well - the messenger is "instant" with little load on the server or client.

Wednesday, October 19, 2011

Tuesday, October 18, 2011

JUnit Max

I've been using JUnit Max for the last few days. It's an Eclipse extension that automatically runs your tests every time you save a change.

Normally I'd stick to open source options (which this is not), but I figure Kent Beck deserves some support.

It's taking me a little getting used to - makes me nervous not running the tests manually. It's designed to be unobtrusive, but part of me wants more blatant feedback that my tests passed.

One of my motivations for using it is to make sure I'm running all the tests, not just the one I'm working on. I've been caught a few times where I didn't discover I broke something elsewhere till some time afterwards.

Even with a fast machine, it still takes a while for the tests to run (although I'm the only one to blame for the speed of the tests), but at least they run in the background and I can continue working.

The web site is pretty minimal and there is no documentation as far as I can tell, but then again, it's pretty self-explanatory.

One of the things that prompted me to try it was listening to a podcast with Kent Beck on Software Engineering Radio (recommended).

JUnit Max has had a bit of a rocky history, pulled in 2009, relaunched in 2010. I think it's a worthwhile product and I hope it's successful.

Sunday, October 16, 2011

Zite: Personalized Magazine for iPad

I've been playing with this a little. I like how it analyzes your on-line presence and suggests material. And then lets you "vote" material up or down. It found quite a few interesting computer articles I hadn't seen. Of course, the last thing I need is more stuff to read!

Friday, October 07, 2011

The Grass Always Seems Greener

After tracking down the third bug to the same area of the code, I decided I had to stop adding more special cases and come up with a better solution.

The code was in the B-tree (technically B+-tree) implementation in my new append-only storage engine for jSuneido.

I'll try to explain the problem. I combine keys with a child node pointer. That leaves the question of whether a key points to the values less than it, or greater than it. I've always gone with greater than. But the problem is that when you do a lower bound binary search, the resulting position is one higher than the one you want. For example, if the tree node contains the keys 2, 4, 6, 8, the leaf containing 5 will be pointed at by the "4". But a lower bound binary search will give the position where you would insert 5, i.e. the position of the "6" key.
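The off-by-one can be seen in a small Java sketch. This is not the jSuneido code; `BtreeSearch`, `lowerBound`, and `childSlot` are hypothetical names, and the sketch assumes that a key equal to the target belongs to that key's child and that the first key acts as a minimum for the node.

```java
final class BtreeSearch {
    /** Lower bound: index of the first key >= target, or keys.length if none. */
    static int lowerBound(int[] keys, int target) {
        int lo = 0, hi = keys.length;
        while (lo < hi) {
            int mid = (lo + hi) >>> 1;
            if (keys[mid] < target)
                lo = mid + 1;
            else
                hi = mid;
        }
        return lo;
    }

    /**
     * Slot of the child to descend into when each key points to the values
     * greater than (or, in this sketch, equal to) it.
     */
    static int childSlot(int[] keys, int target) {
        int pos = lowerBound(keys, target);
        // off-by-one adjustment: step back unless we landed exactly on target
        if (pos == keys.length || keys[pos] != target)
            pos--;
        return Math.max(pos, 0); // assumption: the first key is a minimum
    }

    public static void main(String[] args) {
        int[] keys = {2, 4, 6, 8};
        System.out.println(lowerBound(keys, 5)); // 2, the position of the "6"
        System.out.println(childSlot(keys, 5));  // 1, the position of the "4"
    }
}
```

The adjustment is only a few lines in isolation; the trouble comes when the same correction has to be remembered in every place that searches a node.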

You can adjust for this, but it gets ugly. After the multiple bugs, I started to think that making the keys point to values less than the key would be cleaner because you wouldn't have the off-by-one problem.

So yesterday morning I set out to change the code. It's not a large amount of code and the changes were straightforward, but the code is fairly complex, and the "greater than" assumption was hidden in more places than I would have liked.

Eight hours later I had all my tests passing again. And it did simplify some parts of the code. But it also turned out (not surprisingly) that this approach had its own drawbacks. For example, a standard optimization is to detect when keys are being added in order (i.e. to the "end" of the B-tree) and to put only the new key in the new node, keeping the old full node intact. The way my code works, this is much easier with the "greater than" approach.
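The in-order optimization mentioned here can be illustrated on a flat list of keys. This is a simplified sketch, not how my B-tree nodes are actually laid out; `NodeSplit` and `split` are hypothetical names, and it assumes the full node is sorted and non-empty.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

final class NodeSplit {
    /**
     * Splits a full node to make room for newKey and returns [left, right].
     * If newKey is greater than every existing key (in-order insertion),
     * the old node is kept intact and the new node holds only newKey.
     * Otherwise the node is split in half and newKey goes into its half.
     */
    static List<List<Integer>> split(List<Integer> full, int newKey) {
        List<Integer> left, right;
        if (newKey > full.get(full.size() - 1)) {
            left = new ArrayList<>(full);
            right = new ArrayList<>();
            right.add(newKey);
        } else {
            int mid = full.size() / 2;
            left = new ArrayList<>(full.subList(0, mid));
            right = new ArrayList<>(full.subList(mid, full.size()));
            List<Integer> target = newKey < right.get(0) ? left : right;
            int pos = 0;
            while (pos < target.size() && target.get(pos) < newKey)
                pos++;
            target.add(pos, newKey);
        }
        return Arrays.asList(left, right);
    }
}
```

With keys arriving in order, the first branch leaves every old node full instead of half empty, which is the point of the optimization.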

I'm sure I could have got it all worked out, but switching approaches was not the clear winner that I'd hoped.

Actual written code, with all its messiness, always seems inferior to an imagined alternative, which is always perfectly clean. (Hence the "grass is always greener" title.) But when you implement the alternative, it often ends up just as messy as what you had before. (This relates to the advice to never rewrite from scratch.)

I came up for air, ate some supper, realized I hadn't stepped foot outside the house all day, and considered my options. A glass of wine in hand, I returned to the computer. I wanted to "finish" working on it while all the details were fresh in my mind. I knew I wouldn't get a chance to work on it again for a week or so, and then I'd have to get back up to speed.

I threw out all the changes I'd made. (Except for a few additional tests I'd written.) Annoying, but at least it was only a day's work.

By carefully adjusting the code I was able to keep the old approach, but remove most of the special cases that had been causing me trouble. The code is quite a bit cleaner now. I still have the off-by-one adjustment, but it's in a single place now.

Meanwhile, I've been reading Tokutek's blog about how COLA (cache oblivious lookahead array) style fractal trees are so much better than B-trees. That grass sure looks greener over there :-)

Thursday, October 06, 2011

Farewell to Steve

I sat down with my iPad last night to catch up on my blog reading and the first thing I saw was about Steve having died. We knew it was probable, but it was still a shock. When someone is so much larger than life, you don't want to believe they are bound by the same physical laws as the rest of us.

Apple started in 1977, at a time when I was becoming increasingly absorbed by computers. My first job (only job really, other than Axon) was at a local computer store. In the service department I repaired countless Apple II's. I remember when we first saw the Lisa, and then the Mac. It's hard to communicate what a revolution it was. Nowadays the world is filled with similar amazing gadgets, but not back then.

What a long way Apple has come, and a bumpy road at times. As an entrepreneur myself at that point, I remember my shock at Steve getting kicked out of his own company. Apple struggled and Microsoft grew to dominate. Back then we could not have imagined Apple ending up bigger than Microsoft. (Any more than we imagined Microsoft overtaking IBM.) And the idea that Apple would become the most valuable company in the world would have seemed like total fantasy. Steve returning to Apple and leading it to world domination still seems like a fairy tale.

Steve's abilities as a showman and salesman were alien to a geek like me. To me, what set him apart was his focus on design and user experience. Obviously, he didn't personally do all the design work. But I have to think he played a huge part in gathering and guiding his team, and shaping their culture and priorities. I can't help but wonder how things will change at Apple with Steve gone. In the short term, they will, no doubt, continue on much the same path. But in the long run, I wonder. (Just as I wonder how Axon will change when I'm not there. Not necessarily for the worse, but inevitably different.)

I don't agree with everything that Steve and Apple have done. Their closed, controlled, curated (and taxed) world is not my ideal, but it's hard to argue with what they have achieved. Apple's financial accomplishments are impressive. Even more impressive is that they have one of the highest customer satisfaction ratings. What other company has grown so large, and yet still pleased so many people?

All in all, his life was an insanely great story.

We'll miss you, Steve.

Tuesday, September 06, 2011

Concurrency Continued

I figured I'd better write a concurrency stress test for my DbHashTrie semi-immutable data structure, to increase my confidence that there were no problems. (Of course, a test can never prove the absence of problems.)

I write my test and it passes. But as a good cynical/skeptical tester, I tweak it so it should fail. It still passes?! I realize that I'm running in multiple threads and if an assert fails it'll just throw an exception and that thread will silently die, swallowing the exception. Oops. I add try-catch around the thread body.
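A minimal sketch of the try-catch fix in Java, so that a failure inside a worker thread reaches the code that decides whether the test passed. The names `ThreadedCheck` and `runInThreads` are hypothetical, not from my test code.

```java
import java.util.concurrent.atomic.AtomicReference;

final class ThreadedCheck {
    /**
     * Runs body in nThreads threads and returns the first Throwable thrown
     * by any of them, or null if all of them completed normally.
     */
    static Throwable runInThreads(int nThreads, Runnable body) {
        AtomicReference<Throwable> failure = new AtomicReference<>();
        Thread[] threads = new Thread[nThreads];
        for (int i = 0; i < nThreads; i++) {
            threads[i] = new Thread(() -> {
                try {
                    body.run();
                } catch (Throwable t) {
                    failure.compareAndSet(null, t); // remember the first failure
                }
            });
            threads[i].start();
        }
        for (Thread t : threads) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return e;
            }
        }
        return failure.get();
    }
}
```

A test would then fail if `runInThreads` returns anything other than null, instead of passing because the main thread never saw the exception.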

Now it fails, as expected. I remove the tweak, expecting it to now succeed. It still fails! I remove the multi-threading. It still fails. Huh!? This code is in live use; if there's a bug, that's definitely not good.

After thrashing for a (thankfully short) time, I realize it was a bug in my test, not the actual code. To make it worse, I've been caught by the same thing multiple times. Some day I'll learn! When building a map-like data structure with random keys, you have to remember that you could get duplicate keys, which in most maps will overwrite the previous value. If your test checks for the original value, it'll fail since it'll get the new value.
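One way to avoid the duplicate-key trap is to mirror every insert in a plain HashMap and check against that, so an overwritten key expects the latest value. This is a sketch under that assumption; `RandomKeyTest` and `mismatches` are hypothetical names, and the structure under test is passed in as a put function and a get function.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Random;
import java.util.function.BiConsumer;
import java.util.function.Function;

final class RandomKeyTest {
    /**
     * Inserts n random key/value pairs via put, mirrors them in a HashMap,
     * then checks get against the mirror. Returns the number of mismatches.
     */
    static int mismatches(BiConsumer<Integer, Integer> put,
            Function<Integer, Integer> get, int n, int keyRange, long seed) {
        Random rand = new Random(seed);
        Map<Integer, Integer> expected = new HashMap<>();
        for (int i = 0; i < n; i++) {
            int key = rand.nextInt(keyRange);
            int value = i;
            put.accept(key, value);
            expected.put(key, value); // a duplicate key overwrites here too
        }
        int bad = 0;
        for (Map.Entry<Integer, Integer> e : expected.entrySet())
            if (!e.getValue().equals(get.apply(e.getKey())))
                bad++;
        return bad;
    }
}
```

A small `keyRange` relative to `n` forces plenty of duplicates, which is exactly the case a naive "check the original value" test gets wrong.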

Finally the test seems to be working properly. Except that it's not maxing out my cores. Since it's entirely in memory, with no IO, I can't see why it wouldn't. I play around with various parameters like the number of threads, size of data, and number of iterations but it still doesn't max out my cpu usage. (I use iStat Menus to show my cpu usage all the time on the menu bar so I can easily monitor what's going on.)

The only thing I can think of is the locking. I comment out the two places with synchronized and re-run the tests. Voila, all eight cores at 100%. Not normally what you want to see, but in this case it was.

Hmmm... if it wasn't maxing out the cpu, it must have been running slower. I add some timing. Yikes, the synchronized version is over 10 times slower! (45 seconds with synchronized, 3.8 seconds without.)

Of course, this is close to a worst case scenario since the test doesn't do much more than call the one synchronized method. And although the locking is per node, every access has to start at the root node. Java locks are very fast these days, but only when uncontested. And in this case the nodes at the root of the tree will be heavily contested.

If it was a single variable I could just make it volatile, but it's an array, and putting volatile on an array only makes the reference volatile, not the contents. That's where AtomicReferenceArray comes in. It had crossed my mind a few times, but the interface is quite different from an array so it would be a bit of a hassle to switch. I'd also have to write my own versions of Arrays.copyOf and System.arraycopy.
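To give an idea of the hassle, a hand-written stand-in for Arrays.copyOf might look something like this. It is a sketch, not the code I ended up with; `AtomicArrays` is a hypothetical helper class, and only `AtomicReferenceArray` itself with its `get`, `set`, and `length` methods comes from java.util.concurrent.atomic.

```java
import java.util.concurrent.atomic.AtomicReferenceArray;

final class AtomicArrays {
    /**
     * Rough equivalent of Arrays.copyOf for AtomicReferenceArray: copies
     * the first min(src.length(), newLength) elements, padding with null.
     * Each element access is atomic, but the copy as a whole is not.
     */
    static <T> AtomicReferenceArray<T> copyOf(AtomicReferenceArray<T> src, int newLength) {
        AtomicReferenceArray<T> dst = new AtomicReferenceArray<>(newLength);
        int n = Math.min(src.length(), newLength);
        for (int i = 0; i < n; i++)
            dst.set(i, src.get(i)); // get/set have volatile semantics per element
        return dst;
    }
}
```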

I changed the code to use AtomicReferenceArray. It was a bit slower than the raw array, but not a lot (5 seconds, versus 3.8).

I also did some checking, and the JVM spec does guarantee that updates are atomic (except for longs and doubles). So I think I'm safe not doing anything special for concurrent access. That means threads could read stale values. Which means there could potentially be a data race where two threads could end up loading the same node at the same time. But as far as I can figure, this should be benign since they will both get the same node (it's never modified in the memory-mapped file) and therefore it doesn't matter which one wins. I think it's equivalent to how String calculates its hashCode lazily.

The question is, do I trust this reasoning? Or do I go with the safer, but slower and uglier AtomicReferenceArray?

A little searching on the web turns up: Data-Race-Ful Lazy Initialization for Performance. Ironically, this both confirms my reasoning, and also identifies a bug in my code. I was accessing the shared variable more than once in my code, which can lead to problems due to memory access re-ordering. Easily fixed, but yet another example that it's hard to reason correctly about this stuff. (Just to hammer the point home, another article I found, Implementing hashCode, has the same bug with reading the variable multiple times without using volatile.)

The blog post also references a section in Effective Java (2nd ed) by Joshua Bloch so I dig my copy out and review it. It confirms that the single-check idiom is valid, but also recommends using double checked locking with a volatile variable instead. Even for the single check idiom, it recommends using a volatile variable, although it's not strictly necessary.
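As I understand it, the single-check idiom with a non-volatile field looks roughly like the sketch below, modeled on how String caches its hashCode. `LazyHash` and `cachedHash` are hypothetical names. The important detail is that the shared field is read exactly once into a local variable.

```java
final class LazyHash {
    private final String text;
    private int hash; // deliberately not volatile; 0 means "not computed yet"

    LazyHash(String text) {
        this.text = text;
    }

    /** Single-check idiom: racy but benign, since every thread computes the same value. */
    int cachedHash() {
        int h = hash; // read the shared field exactly once
        if (h == 0) {
            h = text.hashCode(); // deterministic, so racing threads agree
            hash = h;
        }
        return h; // return the local, never re-read the field
    }
}
```

The buggy variant tests the field and then returns the field, which is two reads; without volatile, the second read is allowed to see a stale 0 even though the first one saw the computed value. This only works at all because writes to an int (or a reference) are atomic and the computed value is the same no matter which thread wins.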

After all that, I think I'm going to go with the simplest code with the raw array, non-volatile, and unsynchronized. That's not because I trust my reasoning, but because I trust the explanation and examples of people who are much smarter than me.

Monday, September 05, 2011

Concurrency Strikes Again

I thought it would be a relatively quick job to multi-thread building the indexes when loading a database dump. Three days of thrashing later I more or less had it working.

But it was only about 10% faster so I ripped out my three days' worth of work - not a big enough improvement to justify the extra complexity. I'm a bit puzzled by the limited improvement, but the speed is probably dominated by other factors (e.g. reading and writing the files).

The story doesn't end there though. Along the way I was seeing some very puzzling debugging output. So after abandoning my initial goal I started to dig into what was going on.

The bad news is that I found some serious concurrency flaws in my transaction handling. The good news is that I fixed them. Obviously, I need more/better tests.

Immutable data structures are great for concurrency, but they can have significant overhead compared to regular mutable ones. So, to try to get the best of both worlds, I have some semi-immutable data structures. The idea is that they are immutable when shared between threads, but mutable when confined to one thread. As always, the devil is in the details.

I found that I had inadvertently ended up sharing when mutable, leading to some ugly bugs. The data structure becomes immutable after you persist it to disk. But I had updated the master copy before saving instead of after. A small mistake with big consequences. To make sure I don't make a similar mistake in the future I added asserts in relevant places to ensure that it was immutable when it should be.
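The general shape of such a check, as a much simplified Java sketch: `SemiImmutableList`, `freeze`, and `publish` are hypothetical names, not the jSuneido classes, and the sketch throws explicit exceptions rather than using assert since Java asserts are disabled by default.

```java
import java.util.ArrayList;
import java.util.List;

final class SemiImmutableList {
    private static volatile SemiImmutableList master; // the shared copy
    private final List<String> items = new ArrayList<>();
    private boolean immutable = false;

    /** Mutation is only legal while the structure is confined to one thread. */
    void add(String item) {
        if (immutable)
            throw new IllegalStateException("modified after freeze");
        items.add(item);
    }

    /** Call after persisting to disk and before sharing with other threads. */
    SemiImmutableList freeze() {
        immutable = true;
        return this;
    }

    /** Updating the master copy is where a still-mutable structure would leak. */
    static void publish(SemiImmutableList list) {
        if (!list.immutable)
            throw new IllegalStateException("sharing a mutable structure");
        master = list;
    }

    static SemiImmutableList current() {
        return master;
    }

    int size() {
        return items.size();
    }
}
```

With a check like the one in `publish`, updating the master copy before saving would have failed immediately instead of producing puzzling debugging output days later.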

Another potential issue was that even when immutable, it's only "effectively" immutable since the data structure is loaded from disk lazily. I had synchronized the updates but not the reads. That might actually be ok in this case, as long as writing a reference (pointer) is atomic. But I decided it was better to synchronize in a few more places than depend on something I wasn't sure about.

Friday, September 02, 2011

Append-Only Database Performance

I've been working on the append-only storage engine for jSuneido and most recently dumping and loading. I was flipping back and forth between storage engines and it seemed like the new one was faster. I decided to time it and sure enough, with the new append-only storage engine, loading a database was close to twice as fast - nice!

Note: This was not any kind of scientific benchmark. It was a relatively small database (130mb) and only loading. But still, a promising early result.

This is also still single-threaded. I've been itching to try using multiple threads to build indexes. Each index is relatively independent so it should be feasible, but I'm currently using an "exclusive" transaction so it's a little tricky. This should speed up loading even more.

Tuesday, August 30, 2011

Emergent Design & Functional Thinking

Some good articles by Neal Ford (ThoughtWorks) on the IBM developerWorks site:

Emergent Design

Functional Thinking

Mostly Java with a bit of Groovy.

These are in line with much of my thinking these days regarding immutability, coupling, composition, etc.

Saturday, August 27, 2011

Why Amazon Can't Make a Kindle in the USA

Wednesday, August 17, 2011

Benchmark Surprises

We recently ran into a minor bug in Suneido - tracing temporary indexes in database queries would sometimes report queries that didn't actually have temporary indexes.

I found the problem right away. The query optimization looks at multiple different "strategies", some involve temporary indexes and some don't. The flag that indicated whether a temporary index was needed wasn't getting reset between trying different strategies. This flag wasn't used for much, so the problem hadn't been noticed.
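As an illustration of this kind of bug (not Suneido's actual optimizer), here is a small Java sketch. The `Strategy` and `Optimizer` classes and their fields are hypothetical, and the fix shown derives the flag from the chosen strategy, which is one of several ways to avoid the stale value.

```java
import java.util.List;

/** Hypothetical query strategy: an estimated cost and whether it needs a temporary index. */
final class Strategy {
    final String name;
    final double cost;
    final boolean needsTempIndex;

    Strategy(String name, double cost, boolean needsTempIndex) {
        this.name = name;
        this.cost = cost;
        this.needsTempIndex = needsTempIndex;
    }
}

final class Optimizer {
    boolean tempIndex = false; // meant to describe the chosen strategy

    /** Buggy: the flag is set while costing any strategy and never cleared. */
    Strategy chooseBuggy(List<Strategy> strategies) {
        Strategy best = null;
        for (Strategy s : strategies) {
            if (s.needsTempIndex)
                tempIndex = true; // stale once a cheaper strategy wins
            if (best == null || s.cost < best.cost)
                best = s;
        }
        return best;
    }

    /** Fixed: the flag is derived from the strategy that was actually chosen. */
    Strategy chooseFixed(List<Strategy> strategies) {
        Strategy best = null;
        for (Strategy s : strategies)
            if (best == null || s.cost < best.cost)
                best = s;
        tempIndex = best != null && best.needsTempIndex;
        return best;
    }
}
```

In the buggy version, a query whose cheapest strategy uses an existing index is still reported as needing a temporary index, simply because a rejected strategy needed one.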

The problem was, when I fixed the bug, a bunch of query optimization tests started failing. That surprised me because the flag wasn't used much, and on top of that, the change fixed the flag - why would that break things?

It turned out the one other place where the flag was used was in join optimization. And unintentionally, the code had ended up dependent on the incorrect flag. Ouch. It turned out surprisingly hard to figure out what was going on. The query optimization is complex and because it explores many different strategies (to find the least cost) any debugging output is large and hard to follow.

Eventually I tracked it down to the relative estimated costs of temporary indexes versus look-ups by joins (which was tied indirectly to the flag).

I'm embarrassed to say that I have never really done good benchmarking to check that the "cost" estimates in the code matched the real execution times. Most of the estimates are seat of the pants guesses, adjusted to work reasonably. It's not as bad as it sounds because there's usually one strategy that is much better than the rest, so you don't really need to be exact.

I "fixed" it (so the tests passed) by greatly reducing the cost estimate for look-ups, but I wasn't sure if that just happened to fix it, or if the cost of look-ups was actually a lot lower than I originally thought. (Actually, one test still "failed" but the new strategy looked better than the old one so I "fixed" the test.)

I took a few hours and did some quick benchmarks. The results were surprising. The optimization "cost" takes into account index key size and record size, but it turned out these had very little effect on the execution time. The only significant factor (in the tests I ran) was the number of records processed. A temporary index roughly doubled the time. Joins took roughly the sum of the times of reading the two sides separately; the number of look-ups had little effect.

Note: These results are very specific to Suneido; I would not expect them to be applicable elsewhere. They are also working with data that fits comfortably in memory so disk access is not a factor. That might seem unrealistic, but that's actually the normal case with our systems.

One good thing about the results is that (I think) it validates part of the database implementation. The code is careful to not copy data - the database file is mapped into memory, and that "raw" memory becomes (within a wrapper object) the in-memory record with no copying or conversion. This is likely the reason that record size (and maybe index key size) had little effect on speed. (Since I assume copying and conversion would take time proportional to record size.)

I ended up refactoring the join optimization to not use the flag at all. That left only the tracing using it, and it was easy to rewrite that to work another way. So I ended up removing the problematic flag entirely. Nice.

I'm left with the realization that my query optimization code is likely a lot more complicated than it needs to be. It looks like it would work just as well if I ignored record size and index key size and just based costs on the estimated number of records to be processed at each stage. But I think I'll leave that for another time.

Sunday, August 07, 2011

Scala Again

Recently I've been re-reading Odersky's Scala book (the new edition). I looked back at my original blog posts about Scala and was surprised to see they were over two years old. Time flies!

I set up a copy of Eclipse (3.7 Indigo) with the latest Scala plugin (2.0.0beta09) so I could play around.

One of the examples in the book (and other places) is using case classes and pattern matching to simplify algebraic equations. I do some of that in Suneido (both in the language and the database query optimization) so it's somewhat familiar. Here are the classes:

I chose two optimizations - subtraction of two constant numbers, and addition of zero

This version works for a single level:

The obvious way to make it handle multiple levels is:

But this is top down, which doesn't handle cases like:

BinOp("+", Var("x"), BinOp("-", Num(2), Num(2)))

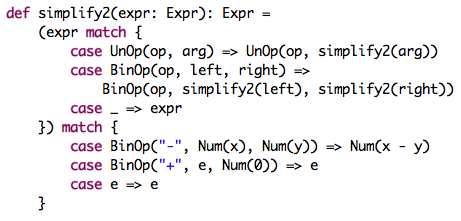

We need to operate bottom up, which means processing a node's children before itself:

BinOp("+", Var("x"), BinOp("-", Num(2), Num(2)))

One thing that bugs me about the recursive versions is that they copy every node, even if they're not changing anything. In Suneido I do something like:

But that seemed verbose and didn't fit with this Scala code. Instead, I added "alter" methods to UnOp and BinOp like:

so simplify only had to change slightly:

Of course, I ask myself if this is premature optimization. Obviously, for this simple test, it's unnecessary. On the other hand, it was part of my exploration.

I always struggle with this question of premature optimization. The problem is (as I've written about before) if you totally ignore performance issues, you can easily end up with a program that, for example, does several times as much allocation as it needs to. And that sub-optimal code won't be conveniently in one hot spot - it'll be spread throughout your code. Allocation in modern JVM's is very fast, but garbage collection and memory bandwidth and cache effects still cost.

The more I play with Scala, the more I like it.

I set up a copy of Eclipse (3.7 Indigo) with the latest Scala plugin (2.0.0beta09) so I could play around.

One of the examples in the book (and other places) is using case classes and pattern matching to simplify algebraic equations. I do some of that in Suneido (both in the language and the database query optimization) so it's somewhat familiar. Here are the classes:

I chose two optimizations: subtraction of two constant numbers, and addition of zero.

This version works for a single level:

BinOp("-", Num(2), Num(2)) => Num(0)

BinOp("+", Var("x"), Num(0)) => Var("x")

The obvious way to make it handle multiple levels is:

But this is top down, which doesn't handle cases like:

BinOp("+", Var("x"), BinOp("-", Num(2), Num(2)))

=> BinOp("+",Var("x"),Num(0))

We need to operate bottom up, which means processing a node's children before itself:

=> Var("x")

One thing that bugs me about the recursive versions is that they copy every node, even if they're not changing anything. In Suneido I do something like:

    arg = simplify(node.arg)
    if (arg != node.arg)
        node = new UnOp(node.op, arg)

But that seemed verbose and didn't fit with this Scala code. Instead, I added "alter" methods to UnOp and BinOp like:

so simplify only had to change slightly:
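For readers who want something runnable, here is a rough Java rendering of the same idea. It is a sketch, not the Scala code from this post: the class shapes are assumptions, `UnOp` is left out for brevity, and plain `instanceof` checks stand in for Scala's pattern matching. It works bottom up and uses an `alter` method that returns the existing node when the children did not change.

```java
/** Hypothetical Java stand-ins for the Scala case classes. */
abstract class Expr {}

final class Var extends Expr {
    final String name;
    Var(String name) { this.name = name; }
}

final class Num extends Expr {
    final int value;
    Num(int value) { this.value = value; }
}

final class BinOp extends Expr {
    final String op;
    final Expr left;
    final Expr right;

    BinOp(String op, Expr left, Expr right) {
        this.op = op;
        this.left = left;
        this.right = right;
    }

    /** Returns this node when the children are unchanged, so nothing is allocated. */
    BinOp alter(Expr newLeft, Expr newRight) {
        return (newLeft == left && newRight == right)
                ? this
                : new BinOp(op, newLeft, newRight);
    }
}

final class Simplifier {
    /** Bottom up: simplify the children first, then the node itself. */
    static Expr simplify(Expr e) {
        if (!(e instanceof BinOp))
            return e;
        BinOp b = (BinOp) e;
        BinOp node = b.alter(simplify(b.left), simplify(b.right));
        // subtraction of two constant numbers
        if (node.op.equals("-") && node.left instanceof Num && node.right instanceof Num)
            return new Num(((Num) node.left).value - ((Num) node.right).value);
        // addition of zero
        if (node.op.equals("+") && node.right instanceof Num && ((Num) node.right).value == 0)
            return node.left;
        return node;
    }
}
```

Because the children are simplified first, the inner subtraction has already become a constant zero by the time the outer addition is examined. A tree with nothing to simplify comes back as the very same object rather than a copy.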

Of course, I ask myself if this is premature optimization. Obviously, for this simple test, it's unnecessary. On the other hand, it was part of my exploration.

I always struggle with this question of premature optimization. The problem is (as I've written about before) if you totally ignore performance issues, you can easily end up with a program that, for example, does several times as much allocation as it needs to. And that sub-optimal code won't be conveniently in one hot spot - it'll be spread throughout your code. Allocation in modern JVMs is very fast, but garbage collection and memory bandwidth and cache effects still cost.

The more I play with Scala, the more I like it.

Saturday, July 30, 2011

Append-Only Database Progress

This won't mean much to anyone but me, but it's the culmination of several weeks of refactoring. This test runs a simple query using both the current storage engine and the new append-only storage engine, by simply choosing which DatabasePackage to use. All the query parsing, optimization, and execution is common, just the low level storage engine is different. While the query is simple, a lot of things have to work at the lower levels to make it function, so it's a good milestone.

This is using jUnit's ability to run tests with multiple sets of parameters.

Thursday, July 21, 2011

Upgrading to Lion

As much as I know better than to rush to new technology, I couldn't resist upgrading to Lion right away.

I wasn't really paying attention, but it took about an hour to download the 4 GB update from the App Store.

I knew I had four machines to update (my iMac and MacBook, and Shelley's iMac and MacBook) so I looked up online how to update multiple machines from a single download.

The trick is that there is a disk image inside the download that you can use to make a bootable DVD or USB drive. But you need to do that after you download, but before you install. One of the better explanations was HOW TO: Do a Clean Install of OS X Lion

Without thinking, I started burning a DVD, only to realize that the MacBook Airs don't have DVD drives. So I grabbed the thumb drive I had handy and tried to use it. But it was only 4 GB and the image didn't fit. I thought I'd have to get a bigger thumb drive but then I remembered I had a small USB hard disk that I use for backing up photos when travelling. It was more than big enough.

The install went smoothly. Again, I didn't time it, but it was something like half an hour.

The next morning, when I tried to start Eclipse to do some programming, I got "no JRE installed" and it asked if I wanted to install one. I said yes and after quite a long pause (30 seconds?) it installed one and Eclipse started. Not bad!

I needed to reselect the JRE in Eclipse. Then a test failed because I'd forgotten to enable asserts on that JRE. But after that, all the tests passed. So far so good.

Then I found Mercurial was broken. A quick internet search found Mercurial SCM (hg) fix for OS X 10.7 Lion. Even better, I read to the bottom before I tried the fix and found there was an updated installer for Mercurial on Lion. I downloaded and installed it and Mercurial was back in business.

It did take quite a while to re-index for Spotlight searching but that happened in the background.

iTunes gave me a rather frightening message about not being able to save my music library, but it seems to be working fine.

Those are really the only problems I've had so far. Not too bad, considering.

I updated my MacBook using the USB hard disk. It went smoothly also.

One change that is going to take some getting used to is the reversing of the scroll direction. Admittedly, the old way does seem backwards - pulling your finger down to scroll up. Now it's the same as the iPhone or iPad, with a metaphor of dragging the page around. But old habits are hard to break and I keep trying to scroll the wrong direction. And I still use Windows at work which will be the opposite way, although with a mouse wheel rather than swiping on a Magic Mouse.

I can't imagine Microsoft having the guts to make this kind of change. Nor can I imagine Microsoft users standing for it - they would just flip the setting to "Classic" mode. (The same way most of my staff immediately switched new versions of Windows to the old Classic appearance, despite my protests.) It would be interesting to know how many Mac users switch it back to the old way.

There will probably be other issues I haven't run into yet, but so far it's been a pretty smooth update.

Wednesday, July 20, 2011

Java BufferedInputStream Problem

I ran into a problem loading a large database dump from cSuneido into jSuneido.

After several hours of debugging, I found if I changed this:

    InputStream fin = new BufferedInputStream(
            new FileInputStream(filename));

to:

    InputStream fin = new FileInputStream(filename);

(i.e. removed the BufferedInputStream)

Then it worked ?!

I searched on the web for BufferedInputStream problems but didn't find anything relevant.

One thing that is suspicious is that this is probably the first file I've tried to load that is larger than 4 GB (i.e. the limit for a 32-bit integer), although I'm not sure why that would be a problem for BufferedInputStream. You'd think it would just be reading sequentially. Unless it's trying to do something clever like memory mapping the whole file.

Note: This is on a 64 bit JVM.

I'm just going to omit the BufferedInputStream for now, but it would be nice to know why there was a problem.
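One way to narrow this down would be to read the same file through both kinds of stream and compare what comes out. The following is only a diagnostic sketch under that assumption, not something I ran against the failing dump. `StreamCompare` and its methods are hypothetical names, while the stream and `CRC32` classes are standard Java.

```java
import java.io.BufferedInputStream;
import java.io.FileInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.util.zip.CRC32;

final class StreamCompare {
    /** Reads the whole stream and returns { byte count, CRC-32 }. */
    static long[] summarize(InputStream in) throws IOException {
        CRC32 crc = new CRC32();
        byte[] buf = new byte[8192];
        long total = 0; // long, so counts past the 32-bit range do not overflow
        int n;
        while ((n = in.read(buf)) != -1) {
            crc.update(buf, 0, n);
            total += n;
        }
        return new long[] { total, crc.getValue() };
    }

    /** True if the buffered and unbuffered reads of the file agree. */
    static boolean sameContent(String filename) throws IOException {
        try (InputStream raw = new FileInputStream(filename);
             InputStream buffered = new BufferedInputStream(new FileInputStream(filename))) {
            long[] a = summarize(raw);
            long[] b = summarize(buffered);
            return a[0] == b[0] && a[1] == b[1];
        }
    }
}
```

If the two reads agree on the large file, the difference is more likely in how the loading code uses the stream than in BufferedInputStream itself.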

I guess I could go dig up the OpenJDK code for BufferedInputStream but I'm not that motivated.

Anybody have any information or thoughts on this?

Tuesday, July 19, 2011

Large Scale Java Design

I ran into a problem when I reached the point where I wanted to start integrating my append-only database storage engine into the rest of Suneido.

The problem was that the old storage engine was too tightly coupled with the rest of Suneido. Not very good design on my part, but in my defense, the design came from the old C++ code, much of which is 10 or 15 years old.

So how should the code be structured? What's the best way to use Java packages and interfaces to reduce coupling? I looked for the Java equivalent of Large Scale C++ Software Design by John Lakos. (Seeing that it was published in 1996, I guess I don't really have an excuse for the poor structure of my old code!) But there doesn't seem to be an equivalent for Java. (Let me know if you have suggestions.)

There are books like Effective Java but they're mostly about small scale. And there are books about J2EE architecture, but I'm not using J2EE.

Books like Growing Object-Oriented Software (recommended) talk about coupling from the view point of testability, but don't explicitly give guidelines for high level architecture.

In addition, what I needed wasn't just less coupling. I needed to be able to configure the code to run with either storage engine. For that, there couldn't be any static coupling between the storage engine and the rest of the code.

One really crude way to do this would be to simply rename one of the two storage engine packages to be the name referenced by the rest of the code. That would require changing every source file. Eclipse would do that, but it wouldn't play well with version control. And I would have to manually ensure that the two packages defined the same public methods.

Instead I decided to clean up my architecture. In case it's helpful to anyone else, I'll sketch out what I came up with. Some of it is standard advice, some is a little more specific to what I needed. None of it is particularly original or unique.

First, other packages (i.e. the rest of the system) should only reference interfaces that are defined in a separate interface package.

That means no direct field access, you have to use setters and getters for public access.

It also means no public static methods or constructors. Instead there is an interface for a "package" object. A singleton instance of the package object is created at startup. All access to the package starts here. So to use the new storage engine you just have to use its package object. This class contains any public constructors and static methods and is the only public class in the package.

One mistake I made, coming new to Java from C++, was to make methods either private or public. I should have been using package scope a lot more than public. (Which might explain why it's the default in Java!) Now, the only methods that are public are the ones implementing public interfaces.

It's nice that Java (since version 5 / 1.5) allows covariant return types, i.e. the public interface can say that a method returns another public interface, but the actual method implementation can return the concrete type. This reduces the need for casting within the package.
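Here is a compact sketch of how these guidelines fit together. Apart from `DatabasePackage`, the names are made up for illustration and are not the actual jSuneido classes. Everything is shown in one file for brevity, whereas in a real layout the interfaces would be in their own package and each storage engine in another.

```java
/** Public interfaces: the only types the rest of the system refers to. */
interface Table {
    String name();
}

interface Database {
    Table getTable(String name);
}

interface DatabasePackage {
    Database open(String filename);
}

/** One storage engine (hypothetical). Methods are public only where an interface requires it. */
final class AppendOnlyTable implements Table {
    private final String name;

    AppendOnlyTable(String name) { this.name = name; } // package scope constructor

    @Override public String name() { return name; }

    int appendCount() { return 0; } // engine-specific detail, package scope
}

final class AppendOnlyDatabase implements Database {
    /** Covariant return: the concrete type, so code inside the package needs no casts. */
    @Override public AppendOnlyTable getTable(String name) {
        return new AppendOnlyTable(name);
    }
}

/** The "package object": the single entry point to this storage engine. */
final class AppendOnlyPackage implements DatabasePackage {
    static final AppendOnlyPackage INSTANCE = new AppendOnlyPackage(); // singleton

    private AppendOnlyPackage() {}

    @Override public AppendOnlyDatabase open(String filename) {
        return new AppendOnlyDatabase();
    }
}
```

Code outside the package holds a `DatabasePackage` and only ever sees `Database` and `Table`, so switching storage engines comes down to choosing a different package object at startup.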

I've finished refactoring so the old storage engine code follows these guidelines. I thought I might run into areas that would take a lot of redesign, but it's gone surprisingly smoothly.

Next I have to refactor the new append-only storage engine to implement the same interfaces. Then I should be able to easily switch between the two.

Of course, the interface I've extracted from the old storage engine doesn't match the new storage engine so I'll have to do some refactoring to end up with a common interface.

As usual, the code is public in Suneido's Mercurial repository on SourceForge.

Tuesday, July 12, 2011

The LMAX Architecture

A good article by Martin Fowler on a very interesting architecture.

Thursday, July 07, 2011

Dreaded Deadlock

I was doing some refactoring and came across a stress test that I hadn't run for a while. (it's too slow to include in my unit test suite)

I ran it and it hung up. What the ...?

I thought maybe it was my refactoring but I reverted my changes and it still hung.

The test is multithreaded and using the Eclipse debugger I could see the threads were all hung at the same spot. It wasn't an infinite loop, but it was a synchronized method.

I used jConsole's deadlock detector to confirm what was going on.

Suspiciously, I had recently made a "minor" change to this exact part of the code.

The funny part was that I made the change because FindBugs reported a potential concurrency problem.

I removed the synchronized and used volatile instead and that fixed the problem. I think volatile is ok in this instance, but it's always hard to know for sure.
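As a rough illustration of the kind of change (the class and field here are hypothetical, not the actual jSuneido code), a simple flag that is only set and read can be made volatile instead of being guarded by synchronized methods:

```java
/** Hypothetical status holder: a volatile field in place of synchronized accessors. */
final class Status {
    // volatile: a write by one thread is visible to later reads by other threads
    private volatile boolean ended = false;

    /** A plain write that does not depend on the old value, so no lock is needed. */
    void end() {
        ended = true;
    }

    /** Never blocks, so this call cannot become part of a lock cycle. */
    boolean isEnded() {
        return ended;
    }
}
```

This only works because the write is a simple assignment. Anything like check-then-act or incrementing a counter would still need a lock or an atomic class.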

I think FindBugs was correct that there was a problem. My mistake was slapping in a fix without really thinking it through. That's definitely a no-no when it comes to concurrency. It's a good example of how the "intuition" that adding more locks can't hurt is very wrong.

My second mistake was not running the related stress tests when I made the change. I need to add the stress tests to our continuous build system at work. And maybe set up something at home as well, if I can make it lightweight enough.

Yet another reminder that concurrency is hard! (not that I should have needed the reminder)

Thursday, June 30, 2011

Mockito for Suneido

From the Couch 12 - Mockito for Suneido

I've been using Mockito for writing tests for jSuneido and I really like it.

So I decided to write something like it for Suneido ...more

Saturday, June 25, 2011

Upgrading to Eclipse Indigo

The latest version of the Eclipse IDE, version 3.7 named Indigo, was released a few days ago.

For me, this was the smoothest upgrade so far. My jSuneido project still seems to build just fine, and the tests still all succeed.

All but one of the plugins I use were available through the Eclipse Marketplace (under the Help menu). Going through the Marketplace is a lot easier than the old style of entering a URL for an update site. The plugin that was not available has not been marked as compatible with Indigo, not surprising as it doesn't seem to be under active development. There are other metrics plugins, but the nice thing about this one is that it also displayed dependency graphs. But it's probably the plugin I use least, so it wasn't a big deal. The other plugins I use (that were available) are: Bytecode Outline (for ASM), EclEmma Code Coverage, FindBugs, and MercurialEclipse.

I haven't really noticed any major improvements, but I'm sure there are some.

The only minor annoyance I noticed was that it spent a long time at first downloading the Maven indexes. Maven support is built in now, but my project doesn't use it, so I'm not sure why it needed to download the indexes. But even this wasn't a big deal since it happened in the background. (I just noticed it in the Progress view and in my network activity.) I tried to use Maven another time but just made a mess and gave up. Maybe I should try again now that support is built in.

If my experience is typical, then I wouldn't be afraid to upgrade.
