I use Shift+Command+4 all the time to take screenshots on my Mac.

What I found out recently is that if you press the space bar after Shift+Command+4, you can capture an entire window or pull-down menu, without having to manually select its area and risk cutting off pixels or including extra ones.

Strangely, this doesn't seem to be mentioned in the Mac OS X Leopard Pocket Guide.

I use Techsmith Snagit on Windows and it has a similar option, but I like the Mac keyboard shortcut since it's always available without having to start another program. (I could leave Snagit running, but I prefer not to have too much stuff running all the time.)

Wednesday, December 31, 2008

Double Speed

We have accumulated quite a large suite of tests for our Suneido applications. It takes about 100 seconds to run them on my work PC.

I happened to run the tests on my iMac (on Vista under Parallels under OS X) and was surprised to see they only took about 50 seconds - twice as fast! My iMac is newer, but I didn't realize it was such a big difference.

One of my rules of thumb over the years has been not to buy a new computer until I could get one twice as fast (for a reasonably priced mainstream machine). No point going through a painful upgrade for a barely noticeable 15 or 20% speed increase.

I usually like to go for the high end of the "normal" range. Buying the low end reduces the lifetime of the machine - it's out of date before you get it. And I'm not going to try to build the "ultimate" machine. You pay too big a premium and have too many problems because you're breaking new ground. And by the time you get it, it's not even ultimate any more.

Doubling the speed has gotten tougher over the years. CPU speeds have leveled out and overall speed is often limited by memory and disk since they haven't improved as much as CPU speeds.

The trend now is towards multi-core, which is great. But when you're still running single-threaded software (like Suneido, unfortunately) then multiple cores don't help much. And the early multi-core CPUs tended to have slower clock speeds and could actually perform worse with single-threaded software.

It's also gotten tougher to judge speed. In the past you could judge by the clock rate - 400 MHz was roughly twice as fast as 200 MHz. (I'm dating myself.) But now there are a lot more factors involved - like caches and pipelines and bus speeds. And power consumption has also become more important.

Anyway, my rule of thumb has now triggered and it's time to look for a new machine. I decided I'd look for something small, quiet, and energy efficient yet still reasonably fast (i.e. as fast as my iMac). I looked at the Dell Studio Hybrid and the Acer Veriton L460-ED8400. The Dell is smaller and lower power but more expensive and not as fast - it uses a mobile CPU. The Acer is not as sexy looking but it's faster and cheaper. Both have slot-loading DVD drives. For a media computer the Dell has a memory card reader, HDMI output, and you can get it with a Blu-ray drive. The Acer has gigabit ethernet and wireless built in.

Neither machine came with a big enough hard drive. These days I figure 500 GB is decent. The Acer only had a 160 GB drive but at least it was 7200 rpm. The Dell offered 250 or 320 GB drives but only 5400 rpm. It seems a little strange considering how cheap drives are.

Ironically, I now find I need more disk space at home (for photos and music) than I do at work. Source code and programs are small. And my email and documents mostly live in the cloud.

I ended up going with the Acer plus upgrading the memory to 4 GB and the drive to 500 GB for about $1000. I paid a bit of a premium to order from a company I'd dealt with before. You can get cheaper machines these days, but it's still a lot cheaper than my iMac.

There are probably a bunch of other alternatives I should have looked at. But I don't have the inclination to spend my time that way. And the paradox of choice tells us that more alternatives don't make us happier, in fact often the opposite.

I've also been wanting to run Vista. I know, the rest of the world is trying to downgrade to XP, but I've been running Vista on my Mac (under Parallels) and I don't have a problem with it. It's got some good features. I'm not a huge Windows fan to start with. I was thinking about trying Vista 64 but these machines didn't come with it and I hear there are still driver problems. So to keep things simple I'll stick to Vista 32.

One challenge will be to swap in the bigger hard drive. I assume Windows will come preinstalled on the hard drive in the computer and I won't get an install disk. Normally you'd plug the second hard drive in and copy everything over. But I suspect these small computers don't have room for a second drive. I should be able to plug both drives into another bigger machine that can take multiple drives and do the copy there.

It's always exciting (for a geek) to get a new machine. Of course, the part I'm trying not to think about is getting the new machine configured the way I want it and all my files moved over and software re-installed. Oh well, it's always a good opportunity to clean up and drop a lot of the junk you accumulate over time.

Saturday, December 27, 2008

Nice UI Feature

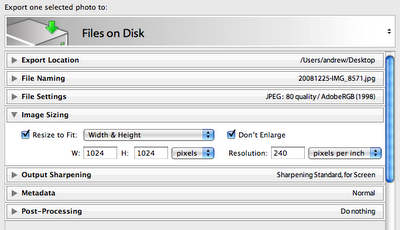

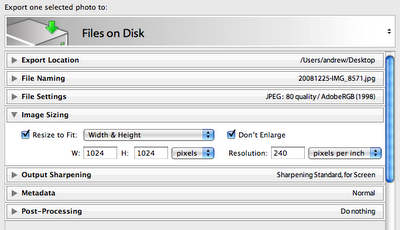

Here's a screen shot of part of the Adobe Lightroom Export dialog:

It consists of a bunch of sections that you can open and close. The part I like is that when they are closed they show a summary of the settings from that section. So you can leave sections closed but still see what you have chosen. Very nice. I haven't noticed this in any other programs.

One minor criticism is that the "mode", in this case "Files on Disk", is chosen with the small, easily missed up/down arrow at the top right. I didn't figure this out for a while - I thought the only way to change it was to choose different presets (not shown in the screenshot).

The other thing I have trouble with when exporting is that I forget to select all the pictures. It does tell you at the top that it is only exporting one selected photo, but I always seem to miss this. But when it completes way faster than I expect, then I realize I forgot to select all the photos. Doh! It might be nice if the export dialog had a way to choose "All photos in collection" like the Slideshow module has.

Another nice part of Lightroom's export is that they have a facility for "plugins". I use Jeffrey Friedl's plugin for exporting to Google Picasa Web albums. There are also plugins for other things like Flickr.

Friday, December 26, 2008

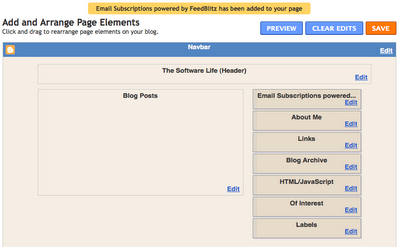

Add Email Subscription to Blogger

It's hard to convince some people to use a feed reader like Google Reader. That includes some of my family. There had to be a better way than manually emailing them every time I posted! Besides, it never hurts to give people options to work the way they prefer.

I noticed an email widget on Seth Godin's blog from FeedBlitz. It's free for personal use and turned out to be fairly easy to add.

Here's how:

- go to feedblitz.com

- click on Try FeedBlitz Now! and sign up

- click on Forms and Widgets

- click on Email Subscription Widgets > New Blogger Widget

- enter your email address and the verification, then click on "Install Your Email Subscription Widget On Blogger"

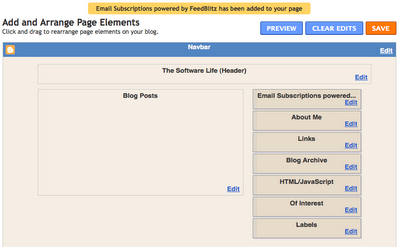

- you may have to log in to Blogger if you're not already, then you should get to:

- choose Add Widget and you should get to your layout

- look for a widget labeled "Email Subscriptions powered..." and drag it to where you want it

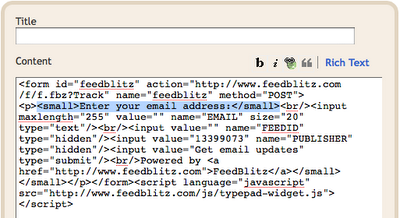

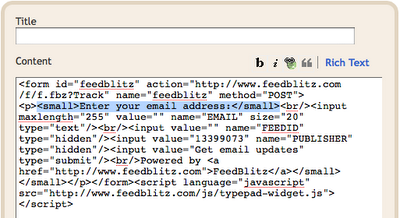

- to customize the appearance, choose Edit

- I removed the Title and changed the code slightly. (Note: after removing the title it will show up in the layout as just "HTML/Javascript") Since you're getting the service for free, I figure it's only fair to leave the "Powered by FeedBlitz". Be careful not to change anything else.

- If it looks ok in Preview you can choose Save and you're finished. If you mess something up, just don't click Save.

Tuesday, December 23, 2008

jSuneido Slogging

Although I haven't been writing about it lately, I have been plugging away at jSuneido.

Once I could run the IDE (more or less) from jSuneido I could run the stdlib tests. Many of which, of course, failed.

So I've been fixing bugs, gradually getting tests to pass.

On a good day it's tedious and unglamorous. Any satisfaction from getting one test to pass is almost immediately extinguished as you move on to the next failing one. It's depressing being forced to confront all your screwups, one after another, for days on end.

On a bad day, like today, it's incredibly frustrating. I had the distinct urge to smash something, and honestly, I'm not usually the kind of person that wants to smash things.

It seemed like everything I touched broke umpteen other things. Things that used to work no longer worked. Eclipse wasn't working right, Parallels wasn't working right, my brain wasn't working right.

If it was someone else's code I could curse them and fantasize about how much better it would be if I had written it. But when it's your own code, and you've just finished rewriting it, the only conclusion you can draw is that you're an idiot!

The actual bugs, once I found them, haven't been major. Just stupid things like off-by-one errors. Quick, is Java Calendar MONTH zero based or one based? Many of them are incompatibilities between cSuneido and jSuneido (which is why my jSuneido tests didn't catch them).

Some of the date code worked in the morning but not in the afternoon. WTF! Guess what, Java Calendar HOUR is 12 hour, for 24 hour you need to use HOUR_OF_DAY. Argh! RTFM
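For the record, here is a small sketch of those two Calendar gotchas. The class name `CalendarPitfalls` and the helper `at` are made up for illustration and are not from jSuneido; only the `java.util.Calendar` fields are real. It assumes you want to work with human-style, one-based months on the outside.

```java
import java.util.Calendar;
import java.util.GregorianCalendar;

public class CalendarPitfalls {
    // Hypothetical helper: builds a calendar from a one-based month
    // and a 24-hour clock hour, in the default time zone.
    static Calendar at(int year, int month1Based, int day, int hour24, int minute) {
        Calendar cal = new GregorianCalendar();
        cal.clear();
        // Calendar.MONTH is zero based: January is 0, December is 11.
        cal.set(year, month1Based - 1, day, hour24, minute);
        return cal;
    }

    public static void main(String[] args) {
        Calendar cal = at(2008, 12, 23, 15, 30); // 3:30 pm on December 23, 2008

        System.out.println(cal.get(Calendar.MONTH));                // 11 (Calendar.DECEMBER)
        System.out.println(cal.get(Calendar.MONTH) + 1);            // 12
        System.out.println(cal.get(Calendar.HOUR));                 // 3 (12-hour clock)
        System.out.println(cal.get(Calendar.AM_PM) == Calendar.PM); // true
        System.out.println(cal.get(Calendar.HOUR_OF_DAY));          // 15 (24-hour clock)
    }
}
```

Code that reads HOUR gives the same answer as HOUR_OF_DAY for any time before noon, which is exactly why this kind of bug only shows up in the afternoon.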

I have about 20 tests still failing. Hopefully it won't take too many more days to clean them up.

Thursday, December 18, 2008

Mac OS X + Epson R1800 = dark prints

I decided I should print some Christmas cards. But when I print the picture I want, it's way too dark. Argh!

This is an ongoing problem. The last batch of printing I did I settled on managing color on the printer (so I could make the following adjustments), setting the gamma to 1.5 (instead of 1.8 or 2.2), and cranking the brightness to the max of +25.

But even with those extreme adjustments, my current print was way too dark. (And managing color on the printer goes against most recommendations, which are to manage color in the computer.)

Lots of other people have this problem (try Google). Leopard seems to have made the problem worse. Epson doesn't seem to want or be able to fix it. Nor are Apple or Adobe any help. On the other hand, it seems to work ok for some (many? most?) people. For some people the problem occurs in Lightroom but not Photoshop, but I get the same results from both.

A lot of the responses to this problem tell people it's because their monitor isn't calibrated. Maybe that's the case some of the time, but if my histogram is correct then the monitor has nothing to do with it. And I have the brightness on my 24" iMac turned down to the minimum. And the images look fine on other monitors as well.

Suggested "fixes" range from using the Gutenprint drivers (which don't support all the printer features) to reinstalling OS X (yikes!). Some people seem to have had success using the older version 3 driver.

I tried some of the common suggestions - deleting my Library/Printers/Epson folder, resetting the print subsystem, emptying the trash (?), restarting (just like Windows!), reinstalling the driver ... I did find there was an update to the 6.12 driver so I installed that as well.

I'm not sure how much difference it made. I now seem to be getting better results managing color on the computer (as most people recommend) rather than the printer. But I still had to adjust the image to the point of looking ugly on the screen (overexposing by .3) in order to get a decent print, which is something I really wanted to avoid. Maybe I can make a preset to make it easier to adjust for printing.

Judging from Google, there seem to be fewer problems with the newer R1900 but I really hate to replace a printer that has nothing physically wrong with it (AFAIK), just to get around a software problem! And the R1800 isn't that old a model.

It's a frustrating problem because there are so many variables and it's very hard to objectively evaluate the results. I just changed the exposure from +.3 to +.5 making it lighter and I swear the print got slightly darker!

Monday, December 15, 2008

Mac OS X on Asus Eee PC

http://www.maceee.com

I've been curious about the new "netbooks". I usually want a more powerful machine, even when traveling (e.g. to edit photos), but I like the idea of something small when I mainly want internet access. For that kind of use I'm not sure OS X is enough of a benefit to warrant the hacking. I think I'd be more tempted to use Linux if I wanted to avoid Windows.

Thursday, November 27, 2008

Burnt by ByteBuffer

In my last post about this, I had "solved" my ByteBuffer problem rather crudely, by setting the position back to zero. But I wasn't really happy with that - I figured I should know where the position was getting modified.

Strangely, the bug only showed up when I had debugging code in place. (Which is why the bug didn't show up until I put in debugging code to track down a different problem!) That told me that it was probably the debugging code itself that was changing the ByteBuffer position.

I started putting in asserts to check the position. The result baffled me at first. Here's what I had:

```java
int pos = buf.position();
buf.mark();
...
buf.reset();
assert(pos == buf.position());
```

Since mark() saves the position and reset() restores the position, I figured this should never fail. But it did! It turns out, what was happening was this:

```
method1:            method2:
buf.mark();
...                 buf.mark();
method2();          ...
...                 buf.reset();
buf.reset();
```

The problem was that the nested mark() in method2 was overwriting the first mark(). So the outer reset() was restoring to the nested position, not to its own mark.

A classic example of why mutable state can cause problems. So not only does ByteBuffer burden you with this extra baggage, but it's also error prone baggage. Granted, it's my own fault for using it improperly.

The fix was easy. I quit using mark() and reset() and instead did:

```java
int pos = buf.position();
...
buf.position(pos);
```

That solved the problem.

I almost got burnt another way. I had used buf.rewind() to set the position back to zero. When I read the documentation more closely I found out that rewind() also clears the position saved by mark(). So if a nested method had called rewind() that would also have "broken" my use of mark/reset.
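Here's a minimal, self-contained sketch that reproduces both behaviors. The class and method names (`MarkResetDemo`, `outerWithMark`, and so on) are made up for illustration and are not the actual jSuneido code; only the `java.nio.ByteBuffer` calls are real.

```java
import java.nio.ByteBuffer;
import java.nio.InvalidMarkException;

public class MarkResetDemo {
    // Hypothetical nested helper that also uses mark()/reset().
    static void nestedWithMark(ByteBuffer buf) {
        buf.mark();   // overwrites the caller's mark
        buf.get();
        buf.reset();
    }

    // Same helper, but saving the position in a local variable instead.
    static void nestedWithLocal(ByteBuffer buf) {
        int pos = buf.position();
        buf.get();
        buf.position(pos);
    }

    // Returns the position after an outer mark/reset wrapped around a nested one.
    static int outerWithMark(ByteBuffer buf) {
        buf.mark();           // mark at the starting position
        buf.get();            // advance by one byte
        nestedWithMark(buf);  // replaces the mark with start + 1
        buf.reset();          // goes back to start + 1, not start
        return buf.position();
    }

    // Same shape, but each method restores its own saved position.
    static int outerWithLocal(ByteBuffer buf) {
        int pos = buf.position();
        buf.get();
        nestedWithLocal(buf);
        buf.position(pos);
        return buf.position();
    }

    // rewind() sets the position to zero and discards the mark,
    // so a later reset() throws InvalidMarkException.
    static boolean resetFailsAfterRewind(ByteBuffer buf) {
        buf.mark();
        buf.rewind();
        try {
            buf.reset();
            return false;
        } catch (InvalidMarkException e) {
            return true;
        }
    }

    public static void main(String[] args) {
        System.out.println(outerWithMark(ByteBuffer.allocate(8)));         // 1
        System.out.println(outerWithLocal(ByteBuffer.allocate(8)));        // 0
        System.out.println(resetFailsAfterRewind(ByteBuffer.allocate(8))); // true
    }
}
```

The local variable version works because each method keeps its own saved position, instead of sharing the single mark slot on the buffer.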

Oh well, I found the problem and fixed it, and now I know to be more careful. On to the next bug!

PS. It's annoying how Blogger loses the line spacing after any kind of block tag (like pre).

Computers Not for Dummies

As much as we've progressed, computers still aren't always easy enough to use.

A few days ago I borrowed Shelley's Windows laptop to use to connect to my jSuneido server.

Of course, as soon as I fired it up it wanted to download and install updates, which I let it do. I thought I was being nice installing updates for her. But when I was done, the wireless wouldn't connect. It had been working fine up till then (that's how I got the updates). I just wrote it off to the usual unknown glitches and left it.

But the next day, Shelley tried to use the wireless and it still wouldn't connect. Oops, now I'm in trouble. I tried restarting the laptop and restarting the Time Capsule (equivalent to an Airport Extreme base station) but no luck. It was late in the evening so I gave up and left it for later.

Actually, the problem wasn't connecting - it would appear to connect just fine, but then it would "fail to acquire a network address" and disconnect. It would repeat this sequence endlessly.

I tried the usual highly skilled "messing around" that makes us techies appear so smart. I deleted the connection. I randomly changed the connection properties. Nothing worked.

Searching on the internet found some similar problems, but no solutions that worked for me.

One of the things I tried was turning off the security on the Time Capsule. That "solved" the problem - I could connect - but it obviously wasn't a good solution.

While I was connected I checked to see if there were any more Windows updates, figuring it was probably a Windows update that broke it, so maybe another Windows update would fix it. But there were no outstanding "critical" updates. Out of curiosity I checked the optional updates and found an update for the network interface driver. That seemed like it might be related.

Thankfully, that solved the problem. I turned the wireless security back on and I could still connect.

It still seems a little strange. Why did a Windows update require a new network interface driver? And if it did, why not make this a little more apparent? And why could it "connect" but not get an address? If the security was failing, couldn't it say that? And why does the hardware driver stop the security working? Is the security re-implemented in every driver? That doesn't make much sense.

But to get back to my original point, how would the average non-technical person figure out this kind of problem? Would they think to disable security temporarily (or connect with an actual cable) so they could look for optional updates that might help?

Of course, it's not an easy problem. I'd like to blame Microsoft for their troublesome update, but they have an almost infinite problem of trying to work with a huge range of third party hardware and drivers and software. Apple would argue that's one of the benefits of their maintaining control of the hardware, but I've had my share of weird problems on the Mac as well.

A few days ago I borrowed Shelley's Windows laptop to use to connect to my jSuneido server.

Of course, as soon as I fired it up it wanted to download and install updates, which I let it do. I thought I was being nice installing updates for her. But when I was done, the wireless wouldn't connect. It had been working fine up till then (that's how I got the updates). I just wrote it off to the usual unknown glitches and left it.

But the next day, Shelley tried to use the wireless and it still wouldn't connect. Oops, now I'm in trouble. I tried restarting the laptop and restarting the Time Capsule (equivalent to an Airport Extreme base station) but no luck. It was late in the evening so I gave up and left it for later.

Actually, the problem wasn't connecting - it would appear to connect just fine, but then it would "fail to acquire a network address" and disconnect. It would repeat this sequence endlessly.

I tried the usual highly skilled "messing around" that makes us techies appear so smart. I deleted the connection. I randomly changed the connection properties. Nothing worked.

Searching on the internet found some similar problems, but no solutions that worked for me.

One of the things I tried was turning off the security on the Time Capsule. That "solved" the problem - I could connect - but it obviously wasn't a good solution.

While I was connected I checked to see if there were any more Windows updates, figuring it was probably a Windows update that broke it, so maybe another Windows update would fix it. But there were no outstanding "critical" updates. Out of curiosity I checked the optional updates and found an update for the network interface driver. That seemed like it might be related.

Thankfully, that solved the problem. I turned the wireless security back on and I could still connect.

Wednesday, November 26, 2008

jSuneido Bugs

After I got to the point where I could start up the IDE on a Windows client from the jSuneido server, I thought it would be a short step to getting the test suite to run (other than the tests that rely on rules and triggers, which aren't implemented yet).

What I should have realized is that running the IDE, while impressive (to me, anyway), doesn't really exercise much of the server. It only involves simple queries, primarily just reading code from the database. Whereas the tests, obviously, exercise more features.

And so I've been plugging away getting the tests to pass, one by one, by fixing bugs in the server. Worthwhile work, just not very exciting.

Most of the bugs from yesterday resulted from me "improving" the code as I ported it from C++ to Java. The problem is, "improving" code that you don't fully understand is a dangerous game. Not surprisingly, I got burnt.

Coincidentally, almost all of yesterday's bugs related to mutable versus immutable data. The C++ code was treating certain data as immutable; it would create a new version rather than change the original. When I ported this code, I thought it would be easier/better to just change the original. The problem was that the data was shared, and changing the original affected all the places it was shared, instead of just the one place where I wanted a different version. Of course, in simple cases (like my tests!) the data wasn't shared and it worked fine.
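A minimal sketch of this kind of bug, using a plain list of column names as a stand-in for the shared data. The class and method names are made up for illustration; this is not the jSuneido code.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

class SharedDataSketch {
    // The in-place "improvement": mutates the list that was passed in,
    // so every other holder of that list sees the change too.
    static List<String> addColumnInPlace(List<String> columns, String extra) {
        columns.add(extra);
        return columns;
    }

    // The immutable style: leave the original alone and return a new version.
    static List<String> withColumn(List<String> columns, String extra) {
        List<String> copy = new ArrayList<>(columns);
        copy.add(extra);
        return Collections.unmodifiableList(copy);
    }

    public static void main(String[] args) {
        List<String> shared = new ArrayList<>(List.of("name", "city"));

        List<String> derived = withColumn(shared, "phone");
        System.out.println(shared);  // original is untouched
        System.out.println(derived); // only the new version has the extra column

        addColumnInPlace(shared, "phone");
        System.out.println(shared);  // everyone holding 'shared' now sees "phone"
    }
}
```

If nothing else holds a reference to the list, both versions behave the same, which is why simple tests don't catch it.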

Some of the other problems involved ByteBuffer. I'm using ByteBuffer as a "safe pointer" to a chunk of memory (which may be memory mapped to a part of the database file). But ByteBuffer has a bunch of extra baggage, including a current "position", a "mark position", and a "limit". And it has a bunch of extra methods for dealing with these. It wouldn't be so bad if you could ignore these extras. But you can't, because even simple things like comparing buffers only compare based on the current "position". Of course, it's my own fault because obviously somewhere I'm doing something that changes that position. Bringing me back to the mutability issue.
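Here is a small sketch of the comparison problem. ByteBuffer.equals only looks at the bytes between the current position and the limit, so a single relative get() is enough to make two buffers with identical contents compare unequal. The sameContents helper is a hypothetical workaround, not something from jSuneido.

```java
import java.nio.ByteBuffer;

class ByteBufferPositionSketch {
    // Compare from index 0 up to each buffer's limit, ignoring the current positions.
    // duplicate() shares the bytes but has its own position/limit/mark,
    // so rewinding the duplicates does not disturb the originals.
    static boolean sameContents(ByteBuffer a, ByteBuffer b) {
        ByteBuffer x = a.duplicate();
        ByteBuffer y = b.duplicate();
        x.rewind();
        y.rewind();
        return x.equals(y);
    }

    public static void main(String[] args) {
        ByteBuffer a = ByteBuffer.wrap(new byte[] {1, 2, 3, 4});
        ByteBuffer b = ByteBuffer.wrap(new byte[] {1, 2, 3, 4});

        System.out.println(a.equals(b));        // true: same remaining bytes
        a.get();                                // relative read advances a's position
        System.out.println(a.equals(b));        // false: a has 3 bytes remaining, b has 4
        System.out.println(sameContents(a, b)); // true: positions are ignored
    }
}
```

Sticking to the absolute methods such as get(int index) avoids moving the position in the first place.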

For the most part I think that the Java libraries are reasonably well designed. Not perfect, but I've seen a lot worse. But for my purposes it would be better if there was a lighter weight "base" version of ByteBuffer without all the extras.

I can see someone saying that I'm "misusing" ByteBuffer, that since I'm coming from C/C++ I'm just trying to get back my beloved pointers. But I don't think that's the case. The reason for using ByteBuffer this way is that it's the only way to handle memory mapped files.
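For anyone who hasn't used it, this is roughly what memory mapped access looks like in Java: FileChannel.map hands back a MappedByteBuffer, and everything after that goes through the ByteBuffer API. This is only a sketch against a temporary file; the names (MappedFileSketch, readIntAt) and the file layout are invented for the example.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

class MappedFileSketch {
    // Map the whole file read-only and read one int at the given byte offset.
    static int readIntAt(Path file, int offset) {
        try (FileChannel ch = FileChannel.open(file, StandardOpenOption.READ)) {
            MappedByteBuffer buf = ch.map(FileChannel.MapMode.READ_ONLY, 0, ch.size());
            return buf.getInt(offset); // absolute get: does not move the position
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Write two ints to a temporary file, then read the second one back through a mapping.
    static int demo() {
        try {
            Path tmp = Files.createTempFile("mapped", ".bin");
            tmp.toFile().deleteOnExit();
            Files.write(tmp, ByteBuffer.allocate(8).putInt(7).putInt(42).array());
            return readIntAt(tmp, 4);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```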

I guess one option would be to limit the use of ByteBuffer to just the memory mapped file io, and to copy the data (e.g. into byte arrays) to use everywhere else. But having to copy everything kind of defeats the purpose of using memory mapped access. Not to mention it would require major surgery on the code :-(

Monday, November 24, 2008

More on Why jSuneido

A recent post by Charles Nutter (the main guy for jRuby) reiterates the advantages of running a language on top of the Java virtual machine.

Thursday, November 20, 2008

jSuneido Slow on OS X - Fixed

Thankfully, I found the problem. Apart from the time wasted, it's somewhat amusing because I was circling around the problem/solution but not quite hitting it.

My first thought was that it was Parallels but I quickly eliminated that.

My next thought was that it was a network issue but if I just did a sequence of invalid commands it was fast.

I woke up in the middle of the night and thought maybe it's the Nagle/Ack problem, and if so, an invalid command wouldn't trigger it because it does a single write. But when I replaced the database calls with stubs (but still doing similar network IO) it was fast, pointing back to the database code.

Ok, maybe it's the memory mapping. I could see that possibly differing between OS X and Windows. But when I swapped out the memory mapping for an in-memory testing version it was still slow.

This isn't making any sense. I still think it seems like a network thing.

I stub out the database calls and it's fast again, implying it's not the network. But in my stubs I'm returning a fixed size record instead of varying sizes like the database would. I change it to return a random size up to 1000 bytes. It's still fast. For no good reason, I change it to up to 2000 bytes and it's slow!

I seem to recall TCP/IP packet size being around 1400 bytes so that's awfully suspicious.

I insert client.socket().setTcpNoDelay(true) into the network server code I'm using and sure enough that solves the problem. (Actually first time around I set it to false, getting confused by the double negative.)
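For reference, a self-contained sketch of where that call goes, using a throwaway loopback connection. Only the setTcpNoDelay(true) line comes from the fix above; the surrounding class, the acceptWithNoDelay helper and the demo are assumptions for illustration, not the actual server code.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;

class NoDelaySketch {
    // Accept one connection and turn off Nagle's algorithm on it.
    static SocketChannel acceptWithNoDelay(ServerSocketChannel server) throws IOException {
        SocketChannel client = server.accept();
        client.socket().setTcpNoDelay(true); // true = "no delay" = Nagle disabled
        return client;
    }

    // Connect to ourselves over loopback and report the option on the accepted socket.
    static boolean demo() {
        try (ServerSocketChannel server = ServerSocketChannel.open()) {
            server.bind(new InetSocketAddress(InetAddress.getLoopbackAddress(), 0)); // port 0 = any free port
            try (SocketChannel outgoing = SocketChannel.open(server.getLocalAddress());
                 SocketChannel client = acceptWithNoDelay(server)) {
                return client.socket().getTcpNoDelay();
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println("TCP_NODELAY enabled: " + demo());
    }
}
```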

A better solution might be to use gathering writes, but at this point I don't want to get distracted trying to implement this in someone else's code.
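A rough sketch of what a gathering write looks like: the header and the body go out in one write call on a GatheringByteChannel instead of two small writes. SocketChannel implements that interface, but to keep the example runnable without a network it writes to a temporary file through FileChannel, which implements it too. The names and the "len=5;" header format are made up.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.channels.GatheringByteChannel;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

class GatheringWriteSketch {
    // Hand both buffers to the channel in a single call.
    // A write may be partial, so loop until both buffers are drained.
    // (With a non-blocking channel this loop would spin; use a selector there.)
    static void writeAll(GatheringByteChannel out, ByteBuffer header, ByteBuffer body)
            throws IOException {
        ByteBuffer[] parts = { header, body };
        while (header.hasRemaining() || body.hasRemaining()) {
            out.write(parts);
        }
    }

    // Write a header and a body to a temporary file and return what ended up on disk.
    static String demo() {
        try {
            Path tmp = Files.createTempFile("gather", ".bin");
            try (FileChannel ch = FileChannel.open(tmp, StandardOpenOption.WRITE)) {
                writeAll(ch,
                        ByteBuffer.wrap("len=5;".getBytes(StandardCharsets.US_ASCII)),
                        ByteBuffer.wrap("hello".getBytes(StandardCharsets.US_ASCII)));
            }
            String result = new String(Files.readAllBytes(tmp), StandardCharsets.US_ASCII);
            Files.delete(tmp);
            return result;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```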

This doesn't explain why the problem only showed up on OS X and not on Windows. There must be some difference in the TCP/IP implementation.

In any case, I'm happy to have solved the problem. Now I can get back to work after a several day detour.

Tuesday, November 18, 2008

jSuneido Slow on OS X

The slowness of jSuneido isn't because of running through Parallels. I tried running the client on two external Windows machines with the same slow results as with Parallels.

Next, in order to eliminate Eclipse as the problem, I figured out how to package jSuneido into a jar file, which turned out to be a simple matter of using Export from Eclipse.

However, when I tried to run the jar file outside Eclipse under OS X, I got an error:

java.lang.NoClassDefFoundError: java/util/ArrayDeque

At first I assumed this was some kind of classpath issue. But after messing with that for a bit I finally realized that it was because the default Java version on OS X is 1.5 and ArrayDeque was introduced in Java 6 (aka 1.6).
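One way to make this kind of mismatch more obvious is a startup check that prints the runtime version and looks for a class the code depends on. This is just a sketch with made-up names, not something jSuneido does.

```java
class RuntimeCheckSketch {
    // True if the named class can be loaded by the running JVM.
    static boolean classAvailable(String name) {
        try {
            Class.forName(name);
            return true;
        } catch (ClassNotFoundException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Shows which Java runtime is actually in use, e.g. when launched from a terminal.
        System.out.println("java.version = " + System.getProperty("java.version"));
        if (!classAvailable("java.util.ArrayDeque")) {
            System.err.println("java.util.ArrayDeque is missing - this runtime is older than Java 6");
        }
    }
}
```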

From the web it seems that Java 6 is not well supported on the Mac. Apple has an update to get it, but it still leaves the default as Java 1.5. And the update is only for 64 bit. I didn't come across any good explanation of why Apple is dragging its feet with Java 6.

I actually already had Java 6 installed since that was what I was using in Eclipse. (Which is why it was working there.)

But ... same problem, painfully slow, running the jar file outside Eclipse (but still on OS X).

Running the jar file on Windows under Parallels was fast, so the problem isn't the Mac hardware (not that I thought it would be).

I'd like to try running jSuneido under Java 1.5 to see if that works any better (since it is the OS X default). But in addition to Deque (which I could probably replace fairly easily) I'm also using methods of TreeMap and TreeSet that aren't available.

What's annoying is that I thought I was protected from this because of the compliance settings in Eclipse:

Maybe I'm misinterpreting these settings, but I expected it to warn me if my code wasn't compatible with Java 1.5.

So far this doesn't leave me with any good options:

- I can run slowly from Eclipse - yuck

- I can package a jar file and copy it to Windows - a slow edit-build-test cycle - yuck

- I can install Eclipse on Windows under Parallels and do all my work there - defeating the purpose of having a Mac - yuck

The real question is still why jSuneido is so slow running on OS X. I assume it's something specific in my code or there would be a lot more complaints. But what? Memory mapping? NIO? And how do I figure it out? Profile? Maybe there are some Java options that would help?

PS. I should mention that it's a major difference in speed, roughly 20x.

Monday, November 17, 2008

Thank Goodness

I was excited when I got to the point where I could start up a Suneido client from my Java Suneido server.

But ... as I soon realized, it was painfully slow. I wasn't panicking since it's still early stages, but it was nagging me. What if, like many people say, Java is just too slow?

I kept forgetting to try it at work on my Windows PC since at home I'm going through the Parallels virtual machine.

Finally I remembered, and ... big sigh of relief ... it's fast on my Windows PC. I haven't done any benchmarks but starting up the IDE seems roughly the same as with the cSuneido server.

I'm not quite sure why it's so slow with Parallels - that's a bit of a nuisance since I work on this mostly at home on my Mac. Maybe something to do with the networking? But at least I don't have a major speed issue (yet).

I'm also still running jSuneido from within Eclipse. That might make a difference too. One of these days I'll have to figure out how to run it outside the IDE!

Sunday, November 16, 2008

Flailing with Parallels 4

The new version of Parallels is out. I bought it, downloaded it, and installed it. They've changed the virtual machine format so you have to convert them. The slowest part of this process was making a backup (my Windows Vista VM is over 100 gb).

Everything worked fine, and I should have left well enough alone, but during the upgrade process I noticed that my 30 gb virtual disk file was 80 gb. So I thought I'd try the Compressor tool. (I'd never used it before.)

I got this message:

Parallels Compressor is unable to compress the virtual disk files,
because the virtual machine has snapshots, or its disks are either
plain disks or undo disks. If you want to compress the virtual disk
file(s), delete all snapshots using Snapshot Manager and/or disable
undo disks in the Configuration Editor.

So I opened the Snapshot Manager and started deleting. I deleted the most recent one, but when I tried to delete the next one it froze. I waited a while, but nothing seemed to be happening and the first deletion had been quick. I ended up force quitting Parallels, although I hated doing this when my virtual machine was running since that's caused problems in the past.

But when I restarted Parallels it was still "stuck". Most of the menu options were grayed out. When I tried to quit I got:

Cannot close the virtual machine window.
The operation of deleting a virtual machine snapshot is currently in
progress. Wait until it is complete and try again.

I force quit Parallels again. I tried deleting the snapshot files but that didn't help. Force quit again.

I had, thankfully, backed up the upgraded vm before these problems. But it took an hour or more to copy the 100 gb to or from my Time Capsule. (That seems slow for a hardwired network connection, but I guess it is a lot of data.) So first I tried to restore just some of the smaller files, figuring the big disk images were probably ok. This didn't help.

Next I tried deleting the Parallels directory from my Library directory, thinking that might be where it had stored the fact that it was trying to delete a snapshot. This didn't help either.

So I bit the bullet and copied the entire vm from the backup. An hour later I start Parallels again, only to find nothing has changed - same problem. Where the heck is the problem?

The only other thing I can think of is the application itself so I start reinstalling. Part way through I get a message to please quit the Parallels virtual machine. But it's not running??? I look at the running processes and sure enough there's a Parallels process. Argh!

In hindsight, the message that "an operation" was "in progress" should have been enough of a clue. But I just assumed that force quitting the application would kill all of its processes. I'm not sure why it didn't. Maybe Parallels "detached" this process for some reason? I also jumped to the (incorrect) conclusion that there was a "flag" set somewhere that was making it think the operation was in progress.

If this had been on Windows, one of the first things I would have tried is rebooting, which would have fixed this. But I'm not used to having to do that on OS X. I probably didn't need to reboot this time either; killing the leftover process likely would have been sufficient. But just to be safe I did.

Sure enough, that solved the problem, albeit after wasting several hours. Once more, now that everything was functional, I should have left well enough alone, but I can be stubborn and I still had that oversize disk image.

This time I shut down the virtual machine before using the Snapshot Manager and I had no problems deleting the snapshots.

But when I restart the vm and run Compressor, I get exactly the same message. I have no snapshots, and "undo disks" is disabled. I'm not sure what "plain" disks are, but mine are "expandable" (which is actually a misleading name since they have a fixed maximum size) and the help says I should be able to compress expandable drives. I have no idea why it refuses to work.

While I'm in the help I see you can also compress drives with the Image Tools so I try that and finally I have success. My disk image file is now down to 30 gb. I'm not sure it was worth the stress though!

Saturday, November 15, 2008

Library Software Sucks

Every so often I decide I should use the library more, instead of buying quite so many books. Since the books I want are almost always out or at another branch, I don't go to the library in person much. Instead, I use their web interface.

First, I guess I should be grateful / thankful that they have a web interface at all.

But it could be so much better!

I'm going to compare to Amazon, not because Amazon is necessarily perfect, but it's pretty good and most people are familiar with it.

Obviously, I'm talking about the web interface for my local library. There could be better systems out there, but I suspect most of them are just as bad.

Amazon has a Search box on every page. The library forces you to go to a separate search page. Although "Keyword Search" is probably what you want, it's on the right. On the left, where you naturally tend to go first, is "Exact Search". Except it's not exactly "exact", since they carefully put instructions on the screen to drop "the", "a", "an" from the beginning. This kind of thing drives me crazy. Why can't the software do that automatically? (It's like almost every site that takes a credit card number wants you to omit the spaces, even though it could do that trivially for you.) However, they don't tell you equally or more important tips like if you're searching for an author you have to enter last name first.
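To show how little code this kind of clean-up takes, here is a sketch of both normalizations. The class and method names are hypothetical; I have no idea what the library system or any particular payment form actually does internally.

```java
class InputNormalizerSketch {
    // Drop a leading "the", "a" or "an" (any case) from a title search.
    static String stripLeadingArticle(String title) {
        return title.trim().replaceFirst("(?i)^(the|an|a)\\s+", "");
    }

    // Remove the spaces and dashes people naturally type in card numbers.
    static String normalizeCardNumber(String input) {
        return input.replaceAll("[\\s-]", "");
    }

    public static void main(String[] args) {
        System.out.println(stripLeadingArticle("The Monkey Wrench Gang"));
        System.out.println(stripLeadingArticle("Another Title")); // "An" is not a separate word here, so nothing is dropped
        System.out.println(normalizeCardNumber("4111 1111-1111 1111"));
    }
}
```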

Assuming you're paying attention enough to realize you want the keyword search, you now have to read the fine print:

Oops. I guess I was too slow. I'm not sure what session it's talking about since I didn't log in. When I click on "begin a new session" it takes me back to the Search screen, with my search gone, of course.

Let's try "edward abbey" - 7 results including some about him or with prefaces by him.

How about "abbey, edward" - 5 results including one by "Carpenter, Edward" called "Westminster Abby". So much for exact phrase. Maybe the comma?

Try "abbey edward" - same results so I'm not sure what they mean by "exact phrase"

The search results themselves could be a lot nicer. No cover images. And nothing to make the title stand out. And the titles are all lower case. That may be how librarians treat them, but it's not how anyone else writes titles.

Oops, sat on the search results screen too long. At least this time it didn't talk about my session.

Back on the search results, there's a check box to add to "My Hitlist". When I first saw that I was excited. Then I read that the list disappears when my "session" ends. Since my "session" seems to get abruptly ended fairly regularly, the hitlist doesn't appear too useful.

It would be really nice if you could have persistent "wish lists" like on Amazon.

Once you find a book you can reserve it. That's great and it's the whole reason I'm on here. But it presents you with a bit of a dilemma. If you reserve a bunch of books, they tend to arrive in bunches, and you can't read them all before they're due back. But if you only reserve one or two, then you could be waiting a month or two to get them.

Ideally, I'd like to see something like Rogers Video Direct, where I can add as many movies as I want to my "Zip List" (where do they come up with these names?) and they send me three at a time as they become available. When I return one then they send me another.

Notice the "Log Out" on the top right. This is a strange one since there's no "Log In". It seems to take you to the same screen as when your "session" times out. The only way to log in that I've found is to choose "My Account", which then opens a new window. This window doesn't have a Log Out link; instead it tells you to close the window when you're finished by clicking the "X" in the top right corner. Of course, I'm on a Mac so my "X" is in the top left. But that's not a problem because if you stay in the My Account window too long (a minute or so) it closes itself.

Of course, this assumes you didn't delay too long in clicking on My Account, because then you'll get:

Obviously, the lesson here is that you better not dither. But why? The reason the web scales is that once I've downloaded a page, I can sit and look at it as long as I like, without requiring any additional effort from the server. I'm not sure why this library system is so intent on getting rid of me. I could see if it was storing a bunch of session data for me that it might just be over aggressive about purging sessions. But I'm just browsing, it should be stateless as far as the server is concerned.

Obviously, the lesson here is that you better not dither. But why? The reason the web scales is that once I've downloaded a page, I can sit and look at it as long as I like, without requiring any additional effort from the server. I'm not sure why this library system is so intent on getting rid of me. I could see if it was storing a bunch of session data for me that it might just be over aggressive about purging sessions. But I'm just browsing, it should be stateless as far as the server is concerned.

And then there's the quality of the data itself. I'd show you some examples, but:

I've tried reporting errors in the data, like author's name duplicated or mis-spelled but there doesn't seem to be any process for users to report errors. It'd be nice if users could simply mark possible errors as they were browsing so library staff could clean them up.

I've tried reporting errors in the data, like author's name duplicated or mis-spelled but there doesn't seem to be any process for users to report errors. It'd be nice if users could simply mark possible errors as they were browsing so library staff could clean them up.

I could go on longer - what about new releases? what about suggestions based on my previous selections? what about a history of the books I've borrowed? what about reviews? Amazon has all these. But I'm sure by now you get the point.

I suspect the public web interface is an afterthought that didn't get much attention. And it's a case where the buyers (the libraries/librarians) aren't the end users (of the public web interface anyway). And since these are huge expensive systems there's large amounts of inertia. Even if something better did come along it would be an uphill battle to get libraries to switch.

And it's unlikely any complaints or suggestions from users even get back to the software developers. There are too many layers in between. I've tried to politely suggest the software could be better but all I get is a blank look and an offer to teach me how to use it. After all, I should be grateful, it's not that long ago we had to search a paper card catalog. I'm afraid at this rate it's not likely to improve very quickly.

The copyright at the bottom reads:

First, I guess I should be grateful / thankful that they have a web interface at all.

But it could be so much better!

I'm going to compare to Amazon, not because Amazon is necessarily perfect, but it's pretty good and most people are familiar with it.

Obviously, I'm talking about the web interface for my local library. There could be better systems out there, but I suspect most of them are just as bad.

Amazon has a Search box on every page. The library forces you to go to a separate search page. Although "Keyword Search" is probably what you want, it's on the right. On the left, where you naturally tend to go first, is "Exact Search". Except it's not exactly "exact", since they carefully put instructions on the screen to drop "the", "a", "an" from the beginning. This kind of thing drives me crazy. Why can't the software do that automatically? (It's like almost every site that takes a credit card number wants you to omit the spaces, even though it could do that trivially for you.) However, they don't tell you equally or more important tips, like the fact that if you're searching for an author you have to enter the last name first.

Assuming you're paying attention enough to realize you want the keyword search, you now have to read the fine print:

Searching by "Keyword" searches all indexed fields. Use the words and, or, and not to combine words to limit or broaden a search. If you enter more than one word without and, or, or not, then your keywords will be searched as an exact phrase.

Since they don't tell you which fields are indexed, the first sentence is useless. The last sentence is surprising. Why would you make the default searching by "exact phrase"? If you wanted "exact" wouldn't you be using the exact search on the left?
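To back up the "trivially" claim, here is a minimal sketch in Python of the two chores these forms push onto the user. I have no idea how the library system or any payment site is actually written; the function names and the sample inputs are made up for illustration.

```python
import re

# Leading articles the "Exact Search" instructions ask you to drop by hand.
LEADING_ARTICLE = re.compile(r"^(the|a|an)\s+", re.IGNORECASE)


def strip_leading_article(title):
    """Drop one leading "the", "a" or "an" from a title, if present."""
    return LEADING_ARTICLE.sub("", title.strip(), count=1)


def normalize_card_number(raw):
    """Remove the spaces and dashes people naturally type in card numbers."""
    return re.sub(r"[\s-]", "", raw)


# Hypothetical inputs, just to show the calls.
print(strip_leading_article("The Monkey Wrench Gang"))
print(normalize_card_number("4111 1111 1111 1111"))
```

The pattern requires whitespace after the article, so a title like "Anthem" is left alone rather than losing its first two letters.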

Oops. I guess I was too slow. I'm not sure what session it's talking about since I didn't log in. When I click on "begin a new session" it takes me back to the Search screen, with my search gone, of course.

Let's try "edward abbey" - 7 results including some about him or with prefaces by him.

How about "abbey, edward" - 5 results including one by "Carpenter, Edward" called "Westminster Abby". So much for exact phrase. Maybe the comma?

Try "abbey edward" - same results, so I'm not sure what they mean by "exact phrase".
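My guess is that the search behaves more like "all of these words, in any order" than like a phrase match. A rough sketch of the difference in Python follows. The two records are invented and so are the function names, and this is only a guess at the behaviour, not how the catalogue actually works.

```python
import re


def keywords(text):
    """Lower-case a string and split it into a set of words, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def all_words_match(query, record):
    """True if every word in the query appears somewhere in the record."""
    return keywords(query) <= keywords(record)


def phrase_match(query, record):
    """True only if the query appears verbatim (ignoring case) in the record."""
    return query.lower() in record.lower()


# Made-up catalogue entries: author (last name first), then title.
records = [
    "Abbey, Edward. desert solitaire",
    "Smith, Edward. a history of the abbey",
]

for query in ("edward abbey", "abbey, edward", "abbey edward"):
    by_words = [r for r in records if all_words_match(query, r)]
    by_phrase = [r for r in records if phrase_match(query, r)]
    print(query, "| all words:", len(by_words), "| phrase:", len(by_phrase))
```

With an all-words match, word order and the comma stop mattering, and a book by some other Edward with "abbey" in the title comes along for the ride, which is at least similar to what I saw.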

The search results themselves could be a lot nicer. No cover images. And nothing to make the title stand out. And the titles are all lower case. That may be how librarians treat them, but it's not how anyone else writes titles.

Oops, sat on the search results screen too long. At least this time it didn't talk about my session.

Back on the search results, there's a check box to add to "My Hitlist". When I first saw that I was excited. Then I read that the list disappears when my "session" ends. Since my "session" seems to get abruptly ended fairly regularly, the hitlist doesn't appear too useful.

It would be really nice if you could have persistent "wish lists" like on Amazon.

Once you find a book you can reserve it. That's great and it's the whole reason I'm on here. But it presents you with a bit of a dilemma. If you reserve a bunch of books, they tend to arrive in bunches, and you can't read them all before they're due back. But if you only reserve one or two, then you could be waiting a month or two to get them.

Ideally, I'd like to see something like Rogers Video Direct, where I can add as many movies as I want to my "Zip List" (where do they come up with these names?) and they send me three at a time as they become available. When I return one then they send me another.

Notice the "Log Out" on the top right. This is a strange one since there's no "Log In". It seems to take you to the same screen as when your "session" times out. The only way to log in that I've found is to choose "My Account", which then opens a new window. This window doesn't have a Log Out link; instead it tells you to close the window when you're finished by clicking the "X" in the top right corner. Of course, I'm on a Mac so my "X" is in the top left. But that's not a problem because if you stay in the My Account window too long (a minute or so) it closes itself.

Of course, this assumes you didn't delay too long in clicking on My Account, because then you'll get:

Obviously, the lesson here is that you'd better not dither. But why? The reason the web scales is that once I've downloaded a page, I can sit and look at it as long as I like, without requiring any additional effort from the server. I'm not sure why this library system is so intent on getting rid of me. If it were storing a bunch of session data for me, I could see it being overaggressive about purging sessions. But I'm just browsing; it should be stateless as far as the server is concerned.

And then there's the quality of the data itself. I'd show you some examples, but:

I've tried reporting errors in the data, like authors' names duplicated or misspelled, but there doesn't seem to be any process for users to report errors. It'd be nice if users could simply mark possible errors as they were browsing so library staff could clean them up.

I could go on longer - what about new releases? what about suggestions based on my previous selections? what about a history of the books I've borrowed? what about reviews? Amazon has all these. But I'm sure by now you get the point.

I suspect the public web interface is an afterthought that didn't get much attention. And it's a case where the buyers (the libraries/librarians) aren't the end users (of the public web interface anyway). And since these are huge expensive systems there's large amounts of inertia. Even if something better did come along it would be an uphill battle to get libraries to switch.

And it's unlikely any complaints or suggestions from users even get back to the software developers. There are too many layers in between. I've tried to politely suggest the software could be better but all I get is a blank look and an offer to teach me how to use it. After all, I should be grateful, it's not that long ago we had to search a paper card catalog. I'm afraid at this rate it's not likely to improve very quickly.

The copyright at the bottom reads:

Copyright © Sirsi-Dynix. All rights reserved.

Version 2003.1.3 (Build 405.8)

I wonder if that means this version of the software is from 2003. Five years is a long time in the software business. Either the library isn't buying the updates, or Sirsi-Dynix is a tad behind on their development. But hey, their web site says "SirsiDynix is the global leader in strategic technology solutions for libraries". If so, technology solutions for libraries are in a pretty sad state.

Friday, November 14, 2008

Wikipedia on the iPhone and iPod Touch

I recently stumbled across an offline Wikipedia for the iPhone and iPod Touch. I don't have either, but Shelley has an iPod Touch so I installed it there. It wasn't free but it was less than $10. You buy the app from the iTunes store and then when you run it for the first time it downloads the actual Wikipedia. It's 2 GB, so it takes a while (and uses up space).

I had a copy of Wikipedia on my Palm and since I drifted away from using/carrying my Palm it's one of the few things I miss.

This version doesn't have any images, but otherwise it seems pretty good. The searching isn't the greatest, for example Elbrus and Mt Elbrus didn't find anything, but Mount Elbrus did.

I'm not sure exactly why I love having an encyclopedia at my fingertips. But there's something about having so much "knowledge" in your pocket. I'm just naturally curious I guess.

Despite trying to cut down on my gadget addiction, this adds another justification for buying an iPhone or iPod Touch. I hate the monthly fees and long term contracts with the iPhone, but it's definitely a more versatile gadget.

Wednesday, November 12, 2008

Lightroom and Lua