Another long tedious day working on getting more of our application tests to run on jSuneido.

These are large scale functional tests, so when one fails it's not easy to figure out why. It could take 5 minutes, it could take 5 hours.

I've found a couple of small bugs in jSuneido. One was because BigDecimal differentiates 1 from 1.0 from 1.00, which makes sense from a scientific precision viewpoint, but not when you're dealing with money. And the problem was actually even more obscure - it was because it differentiates 0 from .0 from .00.
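To make the distinction concrete, here is a minimal Java sketch. The class and helper names are made up for illustration and are not taken from jSuneido. BigDecimal's equals compares value and scale, while compareTo compares value only:

```java
import java.math.BigDecimal;

// Illustrative only: the names here are hypothetical, not jSuneido code.
class BigDecimalScaleSketch {
    // Numeric equality that ignores scale (1 vs 1.0 vs 1.00).
    static boolean sameValue(BigDecimal a, BigDecimal b) {
        return a.compareTo(b) == 0;
    }

    // One possible normalization: map every zero to BigDecimal.ZERO and
    // strip trailing zeros from everything else.
    static BigDecimal normalize(BigDecimal x) {
        return x.signum() == 0 ? BigDecimal.ZERO : x.stripTrailingZeros();
    }

    public static void main(String[] args) {
        BigDecimal one = new BigDecimal("1");
        BigDecimal onePointZero = new BigDecimal("1.0");
        System.out.println(one.equals(onePointZero));     // false: scales differ
        System.out.println(sameValue(one, onePointZero)); // true

        BigDecimal zero = new BigDecimal("0");
        BigDecimal zeroCents = new BigDecimal("0.00");
        System.out.println(zero.equals(zeroCents));                       // false
        System.out.println(normalize(zero).equals(normalize(zeroCents))); // true
    }
}
```

Zero is handled explicitly in normalize so the result does not depend on how a particular Java version strips zeros from a value like 0.00.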

But the rest of the bugs (the majority) have been in our application code, either in the tests or in the code itself. Nothing serious, most of them were inadvertent dependencies on the order of unordered things.
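A typical instance of this kind of bug, sketched in Java with made-up data: a test that checks the iteration order of a hash-based collection can pass on one implementation and fail on another. Sorting before comparing (or comparing as sets) removes the dependency.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collection;
import java.util.Collections;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Illustrative only: the data and names are hypothetical.
class OrderIndependentCompare {
    // Sort a copy so the comparison no longer depends on iteration order.
    static List<String> sorted(Collection<String> items) {
        List<String> copy = new ArrayList<>(items);
        Collections.sort(copy);
        return copy;
    }

    public static void main(String[] args) {
        Set<String> actual = new HashSet<>(Arrays.asList("invoice", "customer", "order"));
        // Fragile: comparing new ArrayList<>(actual) to a fixed list relies
        // on whatever order the hash set happens to iterate in.
        List<String> expected = Arrays.asList("customer", "invoice", "order");
        System.out.println(sorted(actual).equals(expected)); // true
    }
}
```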

But it's frustrating. It would be tedious enough doing all this testing to find bugs in jSuneido. But when I'm doing it to find other people's bugs it's annoying. And of course, as with any large body of code, a lot of it is confusing, hard to understand, and could be improved. (Don't get me wrong, I tend to think the same about my own code.)

Oh well, it's got to be done. Hopefully it doesn't take me too much longer.

Thursday, December 03, 2009

Wednesday, December 02, 2009

Systems that Never Stop

An interesting (and entertaining) talk by Joe Armstrong (the principal inventor of Erlang) about writing fault tolerant systems. Well worth watching.

InfoQ: Systems that Never Stop (and Erlang)

Tuesday, December 01, 2009

A Debugger's Life

Another day of debugging, although with a twist - I found as many bugs in our application code as I did in jSuneido. Just minor stuff - there's nothing like multiple implementations of a language to flush out the edge cases.

It seems like a slow process, but the jSuneido bugs do seem to be getting smaller and more obscure, which gives me a certain amount of confidence that the main stuff is ok. Most stuff just works, which is a vast improvement over not long ago.

Monday, November 30, 2009

Software That Fixes Itself

Technology Review: Software That Fixes Itself

Cool but a little scary - will the software start to evolve?

Tuesday, November 24, 2009

jSuneido Socket Server

Up till now I've been using Ron Hitchens' NIO socket server framework. It has worked pretty well, but it's primarily sample code. As far as I know it's not in production anywhere and not really maintained.

The first problem I ran into with it was that it didn't use gathering writes so it was susceptible to Nagle problems. I got around that with setTcpNoDelay, although that's not the ideal solution.
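For readers unfamiliar with gathering writes, here is a minimal Java NIO sketch. It is not code from Ron's framework or from jSuneido, and the class and method names are hypothetical. A Pipe stands in for a SocketChannel (both implement GatheringByteChannel) so the example runs without a network. The idea is that a header and body are handed to the channel in one call, rather than as two small writes.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.GatheringByteChannel;
import java.nio.channels.Pipe;
import java.nio.charset.StandardCharsets;

// Illustrative sketch only; names are hypothetical.
class GatheringWriteSketch {
    static boolean anyRemaining(ByteBuffer[] parts) {
        for (ByteBuffer b : parts) {
            if (b.hasRemaining()) {
                return true;
            }
        }
        return false;
    }

    // Pass all parts of a message to the channel together, looping because
    // write(ByteBuffer[]) may accept fewer bytes than remain.
    // Assumes a blocking channel; a non-blocking one would register for
    // OP_WRITE instead of looping when nothing can be written.
    static long writeAll(GatheringByteChannel out, ByteBuffer... parts) throws IOException {
        long total = 0;
        while (anyRemaining(parts)) {
            total += out.write(parts);
        }
        return total;
    }

    public static void main(String[] args) throws IOException {
        Pipe pipe = Pipe.open();
        ByteBuffer header = ByteBuffer.wrap("5\n".getBytes(StandardCharsets.US_ASCII));
        ByteBuffer body = ByteBuffer.wrap("hello".getBytes(StandardCharsets.US_ASCII));
        long n = writeAll(pipe.sink(), header, body);
        System.out.println(n + " bytes written in a gathering write");
        pipe.sink().close();
        pipe.source().close();
    }
}
```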

Another problem I ran into was that the input buffer was a fixed size. And worse, it would hang up in an infinite loop if it overflowed. To get around this I made the buffer big, but again, not an ideal solution.
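One common way to avoid a fixed-size input buffer is to grow it on demand. The following is a minimal sketch of that idea, with hypothetical names, and is not the actual jSuneido code:

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

// Illustrative sketch only; names are hypothetical.
class GrowableInputSketch {
    // Return a buffer with room for at least `needed` more bytes, copying
    // the accumulated contents into a larger buffer if required.
    // The buffer is expected to be in "write mode" (position = bytes held).
    static ByteBuffer ensureRoom(ByteBuffer buf, int needed) {
        if (buf.remaining() >= needed) {
            return buf;
        }
        int newCapacity = Math.max(buf.capacity() * 2, buf.position() + needed);
        ByteBuffer bigger = ByteBuffer.allocate(newCapacity);
        buf.flip();      // switch to reading what has been accumulated
        bigger.put(buf); // copy it; bigger stays in write mode
        return bigger;
    }

    public static void main(String[] args) {
        ByteBuffer in = ByteBuffer.allocate(4);
        byte[] chunk = "abcdefgh".getBytes(StandardCharsets.US_ASCII);
        in = ensureRoom(in, chunk.length);
        in.put(chunk);
        System.out.println(in.position() + " bytes buffered, capacity " + in.capacity());
    }
}
```

Doubling the capacity keeps the amount of copying proportional to the data received. A real server would probably also want an upper limit so a misbehaving client can't exhaust memory.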

And lastly, everything sent or received had to be copied in or out of buffers maintained by the framework (rather than used directly).

So I decided to bite the bullet and write my own. It took me about half a day to write. It's roughly 180 lines of code. It's not as flexible as Ron's but it does what I need - gathering writes, unlimited input buffering, and the ability to use the buffers directly without copying. It's fairly easy to use - there's a simple echo server example at the end of the code. I wouldn't want to have to write it with just the Sun Java docs to go by, but with the examples in Ron's book, Java NIO, it's not too bad.

Of course, there may still be bugs in it, but it seems to work well so far.

Thursday, November 19, 2009

jSuneido Back on Track

After my last post I spent a full day chasing my bug with very little progress. Around 7pm, just as I was winding down for the day I found a small clue. It didn't seem like much, but it was nice to end the day on any sort of positive note.

This morning, using the clue, I was able to find the problem. It didn't turn out to be a low level synchronization issue, it was a higher level logical error, although still related to concurrency. That explained the consistency in the error. I had missed one type of transaction conflict, and that meant under certain circumstances one transaction would overwrite another. The fix was easy (two lines of code) once I figured it out.
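The post doesn't say which conflict type was missed or how jSuneido detects conflicts. Purely as an illustration of the general idea, here is a sketch of a write-write conflict check where the first committer wins. All names are hypothetical and this is an assumed scheme, not the jSuneido design. Without the check in commit, the second transaction would silently overwrite the first.

```java
import java.util.HashMap;
import java.util.Map;

// Illustrative sketch only: an assumed scheme, not jSuneido's.
class WriteConflictSketch {
    private long clock = 0; // counts commits
    private final Map<String, Long> lastCommit = new HashMap<>();
    private final Map<String, String> data = new HashMap<>();

    synchronized Tran begin() {
        return new Tran(clock);
    }

    synchronized String get(String key) {
        return data.get(key);
    }

    final class Tran {
        private final long start; // commit count when this transaction began
        private final Map<String, String> writes = new HashMap<>();

        private Tran(long start) {
            this.start = start;
        }

        void write(String key, String value) {
            writes.put(key, value);
        }

        // Fails if another transaction committed a write to any of the
        // same keys after this transaction started.
        boolean commit() {
            synchronized (WriteConflictSketch.this) {
                for (String key : writes.keySet()) {
                    if (lastCommit.getOrDefault(key, 0L) > start) {
                        return false; // conflict: abort rather than overwrite
                    }
                }
                clock++;
                for (Map.Entry<String, String> e : writes.entrySet()) {
                    data.put(e.getKey(), e.getValue());
                    lastCommit.put(e.getKey(), clock);
                }
                return true;
            }
        }
    }

    public static void main(String[] args) {
        WriteConflictSketch db = new WriteConflictSketch();
        Tran t1 = db.begin();
        Tran t2 = db.begin();
        t1.write("k", "from t1");
        t2.write("k", "from t2");
        System.out.println(t1.commit()); // true
        System.out.println(t2.commit()); // false: t1 already wrote "k"
        System.out.println(db.get("k")); // from t1
    }
}
```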

Even with the clue, it wasn't exactly easy to track down. I ended up digging through a 100,000 line log file. Luckily I wasn't just looking through it, I was searching for particular things. It was a matter of finding the particular 50 lines where the error happened. After that it was fairly obvious.

Since fixing the bug I've run millions of iterations of a variety of scenarios for as long as 30 minutes with no problems. This evening I'll let it run for a couple of hours. I'll also think up some additional testing scenarios - there are still a few things that I'm not exercising.

Cleaning up the code before sending it to version control I found an entire data structure (a hash map of transactions) that wasn't being used! I was carefully adding and removing from it, but I never actually used it. I must have at some point. So I removed it and everything worked the same. Humorous.

I don't want to be overly optimistic, I'm sure there are still bugs (there always are), but it's starting to feel like I'm over the worst of it.

Wednesday, November 18, 2009

Offsite Sync and Backup

I have a large amount of music (~30gb) and photo files (~300gb). I back them up to my Time Capsule but that wouldn't protect me if my house burnt down. (Photo files from my Pentax K7 are 20mb each and I might take 10,000 in a year - that's 200gb added per year.)

So for an off-site backup, and so I can access them I keep a "mirror" copy on my computer at work. Currently, I update this mirror manually periodically, by copying new files to a portable hard drive and carrying that to work. But this is an awkward solution, and I don't update as often as I should.

There are a variety of backup and sync products out there, but none of them seem to handle this scenario.

I have been using Dropbox to sync my jSuneido files between home and work and laptop and it works really well. But their biggest account is 100gb.

Google's storage is getting cheaper, but Picasa won't let me store my big DNG (raw) photo files.

Jungle Disk has no storage limit, but at $.15 per gb that's roughly $50 per month, which isn't cheap.

Apart from the cost, the big problem with online storage is that uploading 300gb takes a long time. I signed up for Jungle Disk but it estimated 60 days to upload my files! Obviously, after that I'd only have to upload new files, but even a few thousand photos from a long holiday will take days or weeks to upload. Maybe I need a faster internet connection!

CrashPlan has a really interesting approach of letting you back up to other machines, either your own or your friends'. This avoids the cost of storage. The upload speed may be better since the machines are local and aren't servicing other users. But CrashPlan doesn't sync, so I'd have an off-site backup, but I couldn't access the files (without restoring them). Another problem with CrashPlan is it requires both machines to be turned on at the same time. But to be environmentally friendly, I try to turn off my computers when I'm not using them.

Note: Jungle Disk only recently added sync and from their forum it sounds like it has problems.

A Proposed Solution

Here is an idea for a new service.

I don't really need a copy of my files in the cloud. If I could sync between my home and work computers that would be sufficient. I don't really want to be paying $50 per month just to store my files in the cloud.

All I really need to store in the cloud is a "summary" of my files (e.g. file names, dates, sizes, maybe hashes) plus any new or modified files. Once the files have propagated to my computers they can be removed from the cloud. If you used a clever hash scheme you could even do partial updates of large files. (Although for music and photos this isn't that important since the files don't usually change.)
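As a rough sketch of the "summary" idea, assume each computer publishes a manifest mapping file paths to content hashes. Comparing two manifests then tells you which files still need to be transferred. The names and the choice of SHA-256 are assumptions for illustration, not a description of any existing service.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Illustrative sketch only; names and hash choice are assumptions.
class ManifestDiffSketch {
    static String sha256Hex(byte[] content) {
        try {
            MessageDigest md = MessageDigest.getInstance("SHA-256");
            StringBuilder hex = new StringBuilder();
            for (byte b : md.digest(content)) {
                hex.append(String.format("%02x", b));
            }
            return hex.toString();
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException(e);
        }
    }

    // Paths in source that target lacks, or holds with different content.
    static List<String> missingOrChanged(Map<String, String> source, Map<String, String> target) {
        List<String> result = new ArrayList<>();
        for (Map.Entry<String, String> e : source.entrySet()) {
            if (!e.getValue().equals(target.get(e.getKey()))) {
                result.add(e.getKey());
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // Stand-ins for file contents; a real tool would hash the files.
        Map<String, String> home = new TreeMap<>();
        home.put("photos/a.dng", sha256Hex("raw A".getBytes(StandardCharsets.UTF_8)));
        home.put("photos/b.dng", sha256Hex("raw B".getBytes(StandardCharsets.UTF_8)));
        Map<String, String> work = new TreeMap<>();
        work.put("photos/a.dng", sha256Hex("raw A".getBytes(StandardCharsets.UTF_8)));
        System.out.println(missingOrChanged(home, work)); // [photos/b.dng]
    }
}
```

Only the manifests need to live in the cloud. They are tiny compared to the files themselves.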

This would require far less storage than keeping a complete copy in the cloud.

You'd still have the problem of the initial syncing. But that could either be done by a different method e.g. a portable hard drive like I've been using, or by requiring both computers to be running at the same time for the initial sync. This is similar to Amazon allowing you to send them physical media to load data into S3. And if you had a big addition of files (like the photos from a long holiday) you could use an alternate method to move them around, and the sync could recognize that you already had the same files on each computer.

The businesses that make money from selling storage probably wouldn't be crazy about this idea, but it seems like a natural addition to CrashPlan since they aren't charging for storage, and charging for the sync service would be additional revenue. And presumably it could be cheap since the storage and bandwidth needs are minimal. (The actual data would be transferred peer to peer.)

You could even borrow some ideas from Git - their "tree" of hash values would work well for this, and also provides security and error checking.
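To illustrate the tree-of-hashes idea: if a directory's hash is computed from the names and hashes of its children, then two computers with equal root hashes hold identical trees, and a sync only has to descend into directories whose hashes differ. The sketch below is in the spirit of a Git tree object but is not Git's actual format. The names are hypothetical.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.HashMap;
import java.util.Map;
import java.util.TreeMap;

// Illustrative sketch only; not Git's real object format.
class HashTreeSketch {
    static String sha256Hex(byte[] content) {
        try {
            MessageDigest md = MessageDigest.getInstance("SHA-256");
            StringBuilder hex = new StringBuilder();
            for (byte b : md.digest(content)) {
                hex.append(String.format("%02x", b));
            }
            return hex.toString();
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException(e);
        }
    }

    // Hash of a directory: the hash of its "childHash name" lines, sorted
    // by name so the result does not depend on listing order.
    static String treeHash(Map<String, String> childHashes) {
        StringBuilder listing = new StringBuilder();
        for (Map.Entry<String, String> e : new TreeMap<>(childHashes).entrySet()) {
            listing.append(e.getValue()).append(' ').append(e.getKey()).append('\n');
        }
        return sha256Hex(listing.toString().getBytes(StandardCharsets.UTF_8));
    }

    public static void main(String[] args) {
        Map<String, String> holiday = new HashMap<>();
        holiday.put("img1.dng", sha256Hex("pixels 1".getBytes(StandardCharsets.UTF_8)));
        holiday.put("img2.dng", sha256Hex("pixels 2".getBytes(StandardCharsets.UTF_8)));
        Map<String, String> root = new HashMap<>();
        root.put("holiday", treeHash(holiday)); // a subdirectory contributes its own tree hash
        System.out.println("root hash: " + treeHash(root));
    }
}
```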

If I had some spare time it would be a fun project. If anyone out there wants to implement it, you can count me in as your first customer :-)

Immutable and Pure

More and more I find myself wanting a programming language where I could mark classes as immutable and functions as pure (no side-effects) and have this checked statically by the compiler. Being able to mark methods as read-only (like C++ const) would also be nice.

This is coming from a variety of sources:

- reading about functional languages like Haskell and Clojure

- working on concurrency in jSuneido (immutable classes and pure functions make concurrency easier)

- problems in my company's applications where side-effects have been added where they shouldn't

I have been using the javax annotation for Immutable, which in theory can be checked by programs like FindBugs and that's a step in the right direction.
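For reference, this is roughly what an immutable class looks like in plain Java today. The Money class is a made-up example. The annotation lives in a separate library (JSR-305's javax.annotation.concurrent), so it appears here only as a comment to keep the sketch self-contained. Note that the compiler only enforces the final fields. Nothing checks that the class as a whole is immutable or that its methods are pure, which is the gap described above.

```java
// Illustrative sketch only; Money is a hypothetical example class.
// @Immutable  (from JSR-305, shown as a comment; not checked by javac)
final class Money {
    private final long cents;
    private final String currency;

    Money(long cents, String currency) {
        this.cents = cents;
        this.currency = currency;
    }

    long cents() {
        return cents;
    }

    String currency() {
        return currency;
    }

    // "Pure" by convention: returns a new value and leaves this one alone.
    Money plus(Money other) {
        if (!currency.equals(other.currency)) {
            throw new IllegalArgumentException("currency mismatch");
        }
        return new Money(Math.addExact(cents, other.cents), currency);
    }

    public static void main(String[] args) {
        Money price = new Money(150, "CAD");
        Money total = price.plus(new Money(50, "CAD"));
        System.out.println(price.cents() + " " + total.cents()); // 150 200
    }
}
```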

There are a lot of new languages around these days, but so far I haven't seen any with these simple features. Of course, in a "true" functional language like Haskell, "everything" is pure and immutable (except for monads), so this doesn't really apply. But I think for the foreseeable future most of us are going to be using a mixture.

Tuesday, November 17, 2009

To Laugh or To Cry?

I sat down this morning to write more concurrency tests for jSuneido, fully expecting to uncover more bugs. Amazingly, everything worked perfectly. I have to admit I was feeling pretty darn good, I was almost ready to claim victory. But as the saying goes, pride goes before a fall.

It was time for coffee so I figured I might as well let the tests run for a longer period. I came back to find ... the exact same error I've been fighting for the last week or more! I wouldn't have been surprised to uncover different bugs, but I could have sworn I had squashed this one.

It's bizarre that I keep coming back to this exact same error. I would expect concurrency errors to be more random. Even for a single bug I would expect it to show a variety of symptoms. I guess I shouldn't be complaining, consistency is often helpful to debugging.

I've obviously reduced the frequency of occurrence of the error. I just hope I can get the error to occur in less than 10 minutes of testing. Otherwise it's going to be a very slow debug cycle and I'll have lots of time to review the code!

So am I laughing or crying? Mostly laughing at the moment, but ask me again after I've spent a bunch more hours (or, heaven forbid, days) struggling to find the problem.

Monday, November 16, 2009

How Can This Be Acceptable?

I recently downloaded the latest version of the Scite programming editor. And subsequently, every time I ran it I got Windows security warnings. There's a check box that implies it will let you stop these warnings, but as far as I can tell it has no effect. I have no idea why the previous version ran without any warnings.

I eventually got these instructions to work:

1. Right-click the file and select Properties.

2. Click on the Security tab.

3. Click Advanced in the lower right.

4. In the Advanced Security Settings window that pops up, click on the Owner tab.

5. Click Edit.

6. Click Other users or groups.

7. Click Advanced in the lower left corner.

8. Click Find Now.

9. Scroll through the results and double-click on your current user account.

10. Click OK to all of the remaining windows except the first Properties window.

11. Select your user account from the list up top and click Edit.

12. Select your user account from the list up top again and then in the pane below, check Full control under Allow, or as much control as you need.

13. You'll get a security warning, click Yes.

14. On some files that are essential to Windows, you'll get an "Unable to save permission changes. access is denied" warning and there's nothing that you can do about it to the best of my knowledge.

15. Reconsider why you're using Windows.

By my count, that's 7 levels of nested dialogs. And my name didn't show up in the list for step 12 so I had to Add "APM\andrew" (obviously, users would know to type that). Who designs this stuff? Who reviews it? Microsoft is supposed to hire all these really smart people, but they still seem to produce a lot of stupid stuff.

Sunday, November 15, 2009

jSuneido Success

As I hoped, once I had a small failing test it didn't take too long to find the problem and fix it. It didn't make me feel too stupid (at least no more than the usual when you figure out a bug) since it was a fairly subtle synchronization issue. Have I ever mentioned that concurrency is hard?

The funny (in a sick way) part was that after all that, I still had the original problem. Ouch. Obviously, the problem I isolated and fixed wasn't the only one.

Pondering it more I realized that the bugs I'd been chasing were all originating from a certain aspect of the design. And I realized that even if I managed to chase them down and squash them, that it was still going to end up fragile. Some future modification was likely to end up with the same problem.

So I reversed course, deleted most of the code I wrote in the last few days, and took a simpler approach. Not quite as fast, but simplicity is worth a lot. It only took a half hour or so to make the changes.

Amazingly, all the tests now pass! It took me a minute to grasp that fact. What does that mean when there are no error messages? Oh yeah, that must mean it's working - that's weird.

I'll have to write a bunch more tests before I feel at all confident that it's functional, but this is definitely a step in the right direction. I feel a certain amount of reluctance to start writing more tests - I'd like to savor the feeling of success before I uncover a bunch more problems!

The Joy of a Small Failing Test

Up till now I could only come up with small tests that succeeded and large scale tests that failed.

What I needed was a small test that failed. I finally have one. And even better, it actually fails in the debugger :-)

It's not so easy to come up with a small failing test because to do that you have to narrow down which part of the code is failing. Which is half the challenge, the other half is to figure out why it's failing.

At least now I feel like I have the beast cornered and it's only a matter of time before I kill it.

The test is simple enough that I look at it and think "this can't fail". But it is failing, so obviously I'm missing something. I just hope it's not something too obvious in hindsight because then I'll feel really stupid when I find it.

Saturday, November 14, 2009

jSuneido Progress

The good news is that I've fixed a number of bugs and came up with a reasonable (I think) solution for my design flaw. The solution involved the classic addition of indirection.[1] Of course, it's not the indirection that is the trick, it's how you use it.

The bad news is that after I'd done all this, I was still getting the original error! It only occurs about once every 200,000 transactions (with 2 threads). (Thank goodness for fast computers - 200,000 transactions only take about 5 seconds.) Frustratingly, it doesn't happen in the debugger. With this kind of problem it's not much use adding print statements because you get way too much irrelevant output. A technique I've been finding useful is to have each transaction keep a log of what it's doing. Then when I get the error I can print the log from the offending transaction. It's not perfect because with concurrency problems you really need to see what the other thread was doing, but it's better than nothing.
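The per-transaction log technique can be sketched like this. It is a minimal illustration with hypothetical names, not the actual jSuneido code, and it assumes each transaction is only ever used by one thread. Events are recorded in memory and only printed when something goes wrong.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Illustrative sketch only; names and the size limit are assumptions.
class TranLogSketch {
    private static final int MAX_ENTRIES = 1000; // keep only the most recent events
    private final Deque<String> entries = new ArrayDeque<>();
    private final String name;

    TranLogSketch(String name) {
        this.name = name;
    }

    // Record an event cheaply; nothing is printed at this point.
    void log(String event) {
        if (entries.size() == MAX_ENTRIES) {
            entries.removeFirst();
        }
        entries.addLast(event);
    }

    // The recorded events, oldest first, tagged with the transaction name.
    List<String> dump() {
        List<String> lines = new ArrayList<>();
        for (String e : entries) {
            lines.add(name + ": " + e);
        }
        return lines;
    }

    public static void main(String[] args) {
        TranLogSketch log = new TranLogSketch("T42");
        try {
            log.log("read key=5");
            log.log("update key=5");
            throw new IllegalStateException("simulated failure");
        } catch (IllegalStateException e) {
            // Only the offending transaction's history is printed.
            for (String line : log.dump()) {
                System.err.println(line);
            }
        }
    }
}
```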

It was also annoying because it was the end of the day so I had to leave it with a known error :-(

Thinking about it, I realized I had rushed coding some of the changes, hadn't really reviewed them, and hadn't written any tests. Not good. When I went back to it this morning, sure enough I had made mistakes in my rush job. Obviously, that self imposed pressure to get things resolved by the end of the day is not always a good thing.

So now I'll go back and review the code and write some tests before I worry about whether I've fixed the original problem.

1. A famous aphorism of David Wheeler goes: "All problems in computer science can be solved by another level of indirection." Kevlin Henney's corollary to this is, "...except for the problem of too many layers of indirection." - from Wikipedia

Wednesday, November 11, 2009

jSuneido Multi-Threading Issues

It didn't take much testing to find something that worked single-threaded but failed multi-threaded.

I was expecting this - I figured there'd be issues to work out.

But I was expecting them to be hard to track down and easy to fix and it turned out to be the opposite - easy to track down but hard to fix.

The problem turned out to be more a design flaw than a bug. I've thought of a few solutions but I'm not really happy with any of them.

Oh well, I knew all along this wasn't going to be easy. It'll come.

Monday, November 02, 2009

IntelliJ IDEA Goes Open Source

I recently learned that IntelliJ has released a free, open source community edition of their IDE.

IntelliJ is one of the main IDEs along with Eclipse and NetBeans. I hadn't looked at it much because the other two are free, but it does get some good reviews. (Apparently they did offer free licenses to open source projects but I wasn't aware of that.)

I tried downloading it and installing it and had no problems. It comes with Subversion support "out of the box" and I was easily able to check out my jSuneido project. That's more than I can say for Eclipse where it's still a painful experience to get Subversion working (at least on a Mac). IntelliJ proves that it is possible to do it smoothly.

I haven't had time to play with it much yet. My first impression was that the UI was a little "rougher" than Eclipse. I can probably tweak the fonts to get it a bit closer. Maybe it's due to Eclipse using SWT. (I'm not sure what IntelliJ is using.)

IntelliJ is known for their strong refactoring. To be honest, I only use a few basic refactorings in Eclipse (like rename and extract method) so I don't know if this would be a big benefit. I should probably use more...

IntelliJ is also supposed to have the best Scala plugin. I'll have to try it. I tried the Eclipse one but wasn't too impressed with where it's at so far.

Friday, October 30, 2009

jSuneido Progress

Over the last couple of weeks I've been working on database concurrency in jSuneido. I basically ripped out all the transaction handling code that I ported from cSuneido and replaced it with a more concurrency friendly design.

The tricky part was keeping the code running (tests passing) during the major surgery. It's always tempting to just start rewriting and hope when you finish that it'll all work. Of course, it never does and you're then faced with major debugging because you have no idea what's broken or which part of your changes broke it.

Evolving gradually isn't so easy either, but at least there's no big-bang integration nightmare to face at the end. As you evolve the code some of the intermediate stages aren't pretty since you have two designs cohabiting. And that can lead to some ugly bugs due to the ugly code. But if you're making the changes in small steps, you can always just back up to a working state.

On a few days it was touch and go whether I'd get that day's changes working by the end of the day, but thankfully I always did. I always hate leaving things broken at the end of the day!

There were a few uneasy moments when things weren't working and I started to wonder if there was some flaw in the design that would negate the whole approach. But the bugs always turned out to be relatively minor stuff. (Like transactions conflicting with themselves - oops!)

The new design doesn't write any changes to the database file till a transaction commits. This eliminated code that had to determine if data should be visible to a given transaction, and code that had to undo changes when the transaction aborted and rolled back. It does mean accumulating more data in memory, but memory is cheap these days. And simpler code is worth a lot.

Switching to serializable snapshot isolation, as described in Serializable Isolation for Snapshot Databases, turned out pretty well. You still need to track reads and writes, but the advantage is that conflicts are detected during operations, rather than having to do a slow read validation process during commit (especially since, at least in my design, commit is single threaded). It was also nice to see that this approach is a bit more permissive than my previous design, i.e. it allows more concurrency.

It was exciting (in a geek way) to finally get to the whole point of this exercise - making Suneido multi-threaded. It's taken so much work to get to this point that you almost forget why you're doing it.

I've also gradually been making things thread-safe where needed. My approach to concurrency has been:

- keep as much data thread contained as possible, i.e. minimize shared data

- immutable data where possible

- persistent immutable data structures for multi-version (the equivalent of database snapshot isolation)

- concurrent data structures where applicable e.g. ConcurrentHashMap

- Java synchronized only for bottom level code that doesn't call any other application code (to avoid the possibility of deadlock and livelock)
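As a rough illustration of the points in this list, here is a minimal sketch. It is not jSuneido code; the class, field, and method names (ConcurrencySketch, TableInfo, nextId) are made up for the example.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;

// Hypothetical names throughout; this is not jSuneido code.
final class ConcurrencySketch {
    // Immutable data: all fields are final, so instances can be shared between threads.
    static final class TableInfo {
        final String name;
        final int nrows;

        TableInfo(String name, int nrows) {
            this.name = name;
            this.nrows = nrows;
        }

        // "Modifying" returns a new instance and leaves the old one untouched.
        TableInfo withRows(int n) {
            return new TableInfo(name, n);
        }
    }

    // A concurrent data structure for the state that really has to be shared.
    private static final ConcurrentMap<String, TableInfo> tables =
            new ConcurrentHashMap<String, TableInfo>();

    private static long lastId = 0;

    // synchronized only at the bottom level: this method calls no other application code.
    static synchronized long nextId() {
        return ++lastId;
    }

    static void put(TableInfo t) {
        tables.put(t.name, t);
    }

    static TableInfo get(String name) {
        return tables.get(name);
    }

    public static void main(String[] args) {
        TableInfo t1 = new TableInfo("customers", 10);
        put(t1);
        put(t1.withRows(11));
        // t1 still has 10 rows; the shared map now holds the newer version
        System.out.println(t1.nrows + " " + get("customers").nrows);
    }
}
```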

The real test will be how scalable jSuneido is over multiple cores. My gut feeling is that the design should scale fairly well, but I'm not sure gut feelings are reliable in this area.

It's a funny feeling to be finally approaching the end of this project. There's still lots to do, but the big stuff is handled.

Tuesday, October 27, 2009

The First Mistake in Documentation

From the Java 6 docs for Buffer:

capacity

public final int capacity()

- Returns this buffer's capacity.

- Returns: The capacity of this buffer

I don't know how many times I've seen stuff like this. Why do people write this kind of thing? They must know it's useless. Is it just because they are "required" to write documentation so they fulfill the letter of their requirements? Maybe IDEs are too good at generating boilerplate which then gets left as generated.

Comments like "x = x + 1 // add one to x" are a similar phenomenon.

Saturday, October 24, 2009

When Will I Learn

I had an annoying intermittent bug in jSuneido that I narrowed down to a problem with my PersistentMap. I eventually tracked it down by writing a random "stress" test.

The "funny" part is that I knew I should have done this right from the start. In my previous blog post I said:

"I should probably do some random stress testing to exercise it more."

As is often the case, the fix was tiny - 3 characters! And, of course, it was a situation that was relatively rare. It was a good example that 100% test coverage does not mean no bugs. This kind of thing is one of the downsides of rolling your own.
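For anyone wondering what a random "stress" test looks like, here is a minimal sketch of the general idea, not the actual test. It applies the same random operations to the map under test and to a java.util.HashMap used as a reference, and fails on the first disagreement. The class name is made up, and a TreeMap stands in for the map under test; a persistent map whose put returns a new version would need a small adapter.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Random;
import java.util.TreeMap;

final class RandomStressTest {
    // Applies nOps random put/remove operations to both maps and checks they agree.
    // Returns the number of operations performed; throws on the first mismatch.
    static int run(Map<Integer, Integer> underTest, long seed, int nOps) {
        Map<Integer, Integer> reference = new HashMap<Integer, Integer>();
        Random rand = new Random(seed); // fixed seed so a failure can be reproduced
        for (int i = 0; i < nOps; i++) {
            int key = rand.nextInt(1000); // small key range so keys get overwritten and removed
            if (rand.nextInt(3) == 0) {
                check(reference.remove(key), underTest.remove(key), seed, i);
            } else {
                check(reference.put(key, i), underTest.put(key, i), seed, i);
            }
            check(reference.get(key), underTest.get(key), seed, i);
        }
        if (!reference.equals(underTest)) {
            throw new AssertionError("final contents differ, seed " + seed);
        }
        return nOps;
    }

    private static void check(Integer expected, Integer actual, long seed, int op) {
        if (expected == null ? actual != null : !expected.equals(actual)) {
            throw new AssertionError("seed " + seed + " op " + op
                    + ": expected " + expected + " but got " + actual);
        }
    }

    public static void main(String[] args) {
        // TreeMap is only a stand-in for the map being tested
        run(new TreeMap<Integer, Integer>(), 12345L, 100000);
        System.out.println("ok");
    }
}
```

Printing the seed in the failure message matters: rare cases are exactly what this kind of test finds, and you want to be able to replay them.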

Here's the fixed source code. The bug was a missing "<< h" on line 202.

Friday, October 23, 2009

Give Them Something Better

This quote was talking about movie theaters, but it could easily be talking about software.

"Giving the people what they want is fundamentally and disastrously wrong. The people don't know what they want ... [Give] them something better" - Samuel "Roxy" Rothapfel, 1914 (quoted in Blue Ocean Strategy)

Of course, the danger is going too far to the other extreme, thinking you know better, and ignoring people's real needs.

Thursday, October 22, 2009

Eclipse Annoyance

Have I mentioned that the auto-indent/wrapping in Eclipse really sucks!

insertExistingRecords(tran, columns, table, colnums,

fktablename,

fktable, fkcolumns, btreeIndex);

I know formatting is somewhat subjective, but I can't see how this kind of result can be called "correct" under any interpretation. The frustrating part is that you fix it, and next time you turn around, it's mangled again.

I cannot understand how the Eclipse developers tolerate this. Surely someone would get annoyed enough to fix it. The only thing I can guess is that they don't use it. I wonder why. Maybe I'm the idiot for leaving it turned on!

To be fair, maybe it works for everyone else and it's something specific to my settings that messes it up. If so, I haven't been able to find the magic combination of settings that "works".

Tuesday, October 20, 2009

Another Unavailable eBook Reader

Barnes & Noble has announced their eBook reader. It looks pretty nifty, but of course, it's not available in Canada. (Only US currently.)

Nook, eBook Reader, eBook Device - Barnes & Noble

There are some interesting features like being able to loan books to other people, and being able to read your ebooks on your computer.

So far, the Sony eBook Readers are the only mainstream readers available in Canada.

The Sony eBook store claims about 55,000 books versus Amazon's 350,000. Barnes & Noble claims "over one million" but they must be counting all the public domain stuff. I'd be surprised if they have as good a selection as Amazon.

I wonder if you'd get away with shipping a Nook to a friend's US address. The question is whether they'll let you use a Canadian credit card to pay for books (Amazon doesn't).

Monday, October 19, 2009

Back to No Kindle in Canada

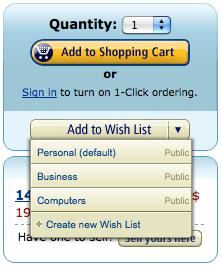

I just tried to order the new international version of the Kindle and got:

When the announcement first came out I specifically went and checked Canada and I'd swear it said it was available. (my previous post)

It really annoys me to continually run into products and services that exclude Canada. I don't think Amazon has anything personal against Canada, so I have to assume it's our industry and government that are blocking it. Stupid and short sighted.

Canada snubbed as Kindle goes global - The Globe and Mail

Wednesday, October 14, 2009

A Java Immutable Persistent List

Something else I needed for jSuneido was an immutable PersistentList (source). I also improved my PersistentMap (source).

One of the things that was bothering me was that I thought there should be a more efficient way to initially build immutable persistent lists and maps. Creating a new version for every element added seemed like overkill.

When I started looking at the Google Collections Library I found the Builder classes they have for their immutable collections. This seemed like a good approach so I added builders to PersistentList and PersistentMap. (The Guava: Google Core Libraries for Java 1.6 also look interesting.)

To be clear, if you just need a thread safe map or list, the java.util.concurrent ones are just fine. I'm using them in a few places.

And if you just need an immutable collection, the java.util collections offer the unmodifiable wrappers and the Google Collections Library has immutable collections. However, they are not "persistent" (see Language Connoisseur). They don't offer any easy way to create new versions with added or removed elements.
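To make the difference concrete, here is a small sketch using only java.util (the class and method names are made up). The unmodifiable wrapper is a read-only view of the backing list, not a snapshot, and the only way to get a "new version" is to copy everything.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

final class UnmodifiableVsPersistent {
    // Returns the size seen through the read-only view after the backing list grows.
    static int viewSizeAfterBackingChange() {
        List<String> backing = new ArrayList<String>();
        backing.add("a");
        List<String> view = Collections.unmodifiableList(backing);
        backing.add("b"); // the view cannot be modified, but it still reflects this change
        return view.size();
    }

    // Making a "new version" means copying every element, O(n) per change.
    static List<String> withAdded(List<String> old, String item) {
        List<String> copy = new ArrayList<String>(old);
        copy.add(item);
        return Collections.unmodifiableList(copy);
    }
}
```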

Persistent immutable data structures really shine if you need to make modified versions but still retain full access to previous versions. That's what I need in the jSuneido database code because it implements multi-version concurrency control. Each transaction sees the database "as of" a certain point in time. Persistent immutable data structures are a great fit for this.
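The simplest possible persistent list is an immutable singly linked ("cons") list. The sketch below is only meant to show the idea of versions sharing structure; it is not the PersistentList mentioned above, and the name ConsList is made up.

```java
// A minimal immutable linked list; every version shares its tail with older versions.
final class ConsList<T> {
    private static final ConsList<Object> EMPTY = new ConsList<Object>(null, null, 0);

    final T head;
    final ConsList<T> tail;
    final int size;

    private ConsList(T head, ConsList<T> tail, int size) {
        this.head = head;
        this.tail = tail;
        this.size = size;
    }

    @SuppressWarnings("unchecked")
    static <T> ConsList<T> empty() {
        return (ConsList<T>) EMPTY; // safe because the empty list holds no elements
    }

    // Returns a new version with value at the front; this version is unchanged.
    ConsList<T> with(T value) {
        return new ConsList<T>(value, this, size + 1);
    }
}
```

Adding an element allocates one node and copies nothing. A transaction that started with an older version keeps seeing exactly that version, no matter what gets added afterwards.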

Java ByteBuffer Annoyances

jSuneido uses ByteBuffers to memory map the database file, but they're not ideal for this. Unfortunately, they're the only option.

Java NIO Buffers are meant to be used in a very particular way with methods like mark, reset, flip, clear, and rewind. For the purpose they are designed for, I'm sure they work well.

But I'm not sure that shoehorning the memory mapping interface into ByteBuffer was the best design choice.

jSuneido memory maps the database file in big chunks (e.g. 4 MB). When you want access to a particular record it returns a new ByteBuffer that is a "slice" of the memory-mapped one. This is the closest I can get to cSuneido returning a pointer. The problem is that slice doesn't take any arguments - you have to set the position and limit of the buffer first. Which means the operation isn't thread safe because a concurrent operation could also be modifying the position and limit.

Most operations on buffers have both "relative" (using the current position) and "absolute" (passing the position as an argument) versions. For some reason slice doesn't have an absolute version.

Another operation that is missing an absolute version is get(byte[]) so again you have to mess with the buffer's current position, meaning concurrency issues.
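One common way around this, sketched below, is to call duplicate() first. The duplicate shares the same content but has its own position, limit, and mark, so the shared buffer is never modified. This is an illustration rather than what jSuneido actually does, and the class and method names are made up. It costs an extra small object per call.

```java
import java.nio.ByteBuffer;

final class Slices {
    // Returns a view of buf[offset, offset + length) without touching buf's position or limit.
    static ByteBuffer slice(ByteBuffer buf, int offset, int length) {
        ByteBuffer dup = buf.duplicate(); // same content, independent position/limit/mark
        dup.limit(offset + length);       // set the limit first so the new position is always valid
        dup.position(offset);
        return dup.slice();
    }

    // An "absolute" bulk get: copies length bytes starting at offset into a new array.
    static byte[] getBytes(ByteBuffer buf, int offset, int length) {
        byte[] bytes = new byte[length];
        ByteBuffer dup = buf.duplicate();
        dup.position(offset);
        dup.get(bytes); // relative get, but only the duplicate's position moves
        return bytes;
    }
}
```

This relies on no other code changing the shared buffer's position or limit. As far as I know, much later Java versions (13 and up) did add absolute variants of slice and the bulk get, but those aren't available on the Java 6 being used here.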

It would have been much better (IMO) if ByteBuffer was immutable (i.e. all absolute operations). All the position/mark/limit reset/clear/flip stuff should have been in a separate class that wrapped the base immutable version. But not only does the current design force you to drag along a bunch of extra baggage, but it also (for some unknown reason) omits key operations that would let you use it as immutable.

Considering that one of the goals of java.nio was concurrency, it seems strange that they picked a design that is so unfriendly to it.

Monday, October 12, 2009

jSuneido Performance

I was troubled by jSuneido appearing to be 10 times slower than cSuneido so I did a little digging.

The good news is that with a few minor adjustments it's now only about 50% slower. That takes a load off my mind.

The adjustments included:

- using the server VM (adding -server to the JRE options)

- disabling asserts (I had some slow ones for debugging)

- removing dynamic loading of classes (if a global name wasn't found it would try to class load it)

- recording when a library lookup fails to avoid doing it again
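The last item is what is sometimes called negative caching. A minimal sketch of the idea follows; LibraryCache and slowLookup are hypothetical names, not the jSuneido code. A sentinel object is needed because ConcurrentHashMap does not allow null values.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;

final class LibraryCache {
    private static final Object MISSING = new Object(); // marks "looked up, not found"
    private final ConcurrentMap<String, Object> cache = new ConcurrentHashMap<String, Object>();
    int slowLookups = 0; // for demonstration only; this counter is not thread safe

    // Returns the definition for name, or null if there is none.
    Object get(String name) {
        Object x = cache.get(name);
        if (x == null) {
            x = slowLookup(name);
            cache.put(name, x == null ? MISSING : x); // remember failures too
        }
        return x == MISSING ? null : x;
    }

    // Stand-in for the expensive library lookup.
    private Object slowLookup(String name) {
        ++slowLookups;
        return name.equals("Known") ? "definition of Known" : null;
    }
}
```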

The C++ Suneido code makes a point of avoiding copying data as it moves from the database to the language. For example, a string that comes from a database field actually points to the memory mapped database - zero copying. Of course, I never knew how much benefit this was because I had nothing to compare it to. It just seemed like it had to be beneficial.

In Java it's a lot harder to avoid copying. To get a string from the database, not only do you have to copy it twice (from a ByteBuffer to a byte array and then to a String) but you also have to decode it using a character set. I could avoid the decoding by storing strings as Java chars (16 bit) but that would double the size in the database.

There may be better ways to implement this, I'll have to do some research. Another option would be to make the conversion lazy i.e. don't convert from ByteBuffer to String until the string is needed. Since a certain percentage of fields are never accessed, this would eliminate unnecessary work.
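A lazy conversion could look something like the sketch below. The class name and the choice of ISO-8859-1 as the encoding are assumptions for the example. It also shows the two copies: ByteBuffer to byte array, then byte array to String (with decoding).

```java
import java.nio.ByteBuffer;
import java.nio.charset.Charset;

final class LazyString {
    private static final Charset CHARSET = Charset.forName("ISO-8859-1"); // assumed encoding
    private final ByteBuffer buf; // the field's encoded bytes, e.g. a slice of the mapped file
    private String value;         // decoded on first use

    LazyString(ByteBuffer buf) {
        this.buf = buf;
    }

    @Override
    public synchronized String toString() {
        if (value == null) {
            byte[] bytes = new byte[buf.remaining()];
            buf.duplicate().get(bytes);         // copy 1: buffer to byte array
            value = new String(bytes, CHARSET); // copy 2, plus character decoding
        }
        return value;
    }

    synchronized boolean isConverted() {
        return value != null;
    }
}
```

Fields that are never accessed never pay for either copy.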

One small benchmark I ran (admittedly not representative of real code) spent 45% of its time in java.nio.Bits.copyToByteArray! Yikes, almost half the run time! Judging by this, my efforts to avoid copying in cSuneido were probably worthwhile.

A strange result from this same benchmark was that 25% of the time was in String.intern. I found this hard to believe but it seemed consistent. I even tried the YourKit profiler and got the same result. (Although if it is using the same low level data collection that would explain it.) But if I did a microbenchmark on String.intern alone it was very fast, as I would expect. (I checked the profiler output to make sure the intern wasn't getting optimized away.)

I don't know if this is an artifact of the profiling, or if intern slows down when there are a lot of interned strings, or if it slows down due to some interaction with the other code. I did find some reports of intern related performance problems but nothing definitive.

jSuneido currently interns method name strings so that it can dispatch using == (compare reference/pointer) instead of equals (compare contents). Most cases e.g. object.method() are interned at compile time. (Java always interns string literals.) But some cases e.g. object[method]() are still interned at run time.
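Here is a small sketch of why the run time cases need the intern call. The names are made up. A string built at run time has the same contents as the literal but is a different object, so == only works after interning.

```java
final class InternDispatch {
    static final String SIZE = "Size"; // a literal, so it is interned automatically

    // Identity comparison: only correct if the caller passes an interned string.
    static boolean isSize(String internedName) {
        return internedName == SIZE;
    }

    // A name built at run time is equal to the literal but is not the same object.
    static boolean runtimeNameMatches() {
        String name = new StringBuilder("Si").append("ze").toString();
        return isSize(name);
    }

    // After interning, the run time name is the same object as the literal.
    static boolean internedNameMatches() {
        String name = new StringBuilder("Si").append("ze").toString();
        return isSize(name.intern());
    }
}
```

runtimeNameMatches returns false and internedNameMatches returns true.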

On larger, more realistic tests intern doesn't show up so I'm not going to worry about it for now.

Amazon Kindle International

It looks like we'll be able to get the Kindle in Canada soon.

Amazon.com: Kindle Wireless Reading Device (6" Display, U.S. & International Wireless, Latest Generation): Kindle Store

I've been getting close to buying a Sony reader, now I'll have to choose:

Sony

- touch screen

- more open formats e.g. ePub

- access to public domain Google Books

- access to some public libraries

Kindle

- bigger selection of books

- free 3G wireless

- access to Wikipedia

- purchased books can also be accessed on iPhone or iPod Touch

So far it's only the smaller Kindle that's going to be available internationally.

One thing it would be good for is computer books. I hate buying them because I know they'll be out of date within a year or two. But you can't get most of them from the library, and even if you could, often I need to refer back to them for longer than I could borrow them from the library. And it would be great to not have to haul physical books back and forth between home and office. Environmentally, you save trees, but you consume another gadget, which wouldn't be so bad if it lasted longer, but you know it'll be out of date in a year or two.

Quick and Dirty Java Profiling

Search for "Java Eclipse profiler" and one of the first things you'll find is The Eclipse Test & Performance Tools Platform. I installed it, but when I went to use it, it told me my platform wasn't supported. I'm not sure what that meant - 64 bit? OS X? Java 1.6? Eclipse 3.4? I didn't pursue it, life's too short.

I looked at a few more tools but nothing stood out. There's the YourKit profiler but it's a commercial product. (Although maybe free for open source projects?) Eventually I found an article on using the built-in hprof. That sounded simple enough.

To use hprof you add something like -agentlib:hprof=cpu=samples to your Java command line. In Eclipse you can do this from Eclipse > Preferences > Java > Installed JREs > Edit. It's crude to edit the preferences to turn this on and off, but ok for limited use. (There may be a better way to do this, I'm still far from an Eclipse expert.)

This will produce a java.hprof.txt file with the results. It's fairly self explanatory.

There are more options for hprof (you can get a list with -agentlib:hprof=help)

Sunday, October 11, 2009

jSuneido Passes Accounting Tests

Another good milestone - jSuneido now successfully runs all the tests from our accounting application.

The further I go, the tougher the bugs tend to get. One of them took me two days to track down and fix. The problems were in predictable areas like obscure details of database rules and math. Some of them were not so much bugs as just small incompatibilities with cSuneido. A few were errors in the tests themselves.

I've got more application tests I can run. Hopefully they won't uncover too many more problems.

On a less positive note, jSuneido is taking almost 10 times longer than cSuneido to run the accounting tests. That's a significant difference. I haven't done any optimizing yet, but I wouldn't expect optimizing to make a 10 times difference. It may be time to find a profiler and see what's taking all the time.

The stdlib tests run in similar amounts of time on cSuneido and jSuneido so I suspect the difference is in the database - the accounting tests use the database a lot more than the stdlib tests. cSuneido has a thinner, more direct interface to the memory mapping, which may make a fair difference in speed. I should be able to write some tests to see if this is the problem. Of course, if it is, I'm not sure how I'm going to fix it ...

Thursday, October 08, 2009

A Java Immutable Persistent Map

I know this is going to seem like I'm reinventing the wheel again, but I honestly tried to find existing Java code for a persistent map. I also took a stab at extracting something usable from Clojure but it was not easy to untangle from the rest of the Clojure code. It does seem surprising that there isn't anything out there (at least easily findable). I guess Java programmers don't write functional code.

It took me most of a day and about 300 lines of code to implement, using the Ideal Hash Trees paper and the occasional glance at the Clojure code to see how it did things. I took a similar approach to Clojure's but simplified it somewhat. I also made mine compatible with the Java collections classes. And mine doesn't depend on anything other than the standard Java libraries, so it should be a useful starting point for anyone else.

I started implementing iteration but gave up for lack of time. (And YAGNI - I may not need it.) Iterating through trees is always a pain.

The code is not as clean as I'd like, e.g. the methods could be smaller, but I did write tests with pretty much 100% coverage. I should probably do some random stress testing to exercise it more. And there are some magic numbers in there like 0x1f and 5 and 32.

I didn't find it right away, so I'll point out that if you're looking for CTPOP (count population) in Java, it's Integer.bitCount.
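For anyone wondering where CTPOP and those magic numbers come in, here is a minimal sketch of the indexing step from the Ideal Hash Trees approach. It is not the code from my map; the class and method names (HamtIndex, slot, present, arrayIndex) are made up for illustration. Each node has 32 logical slots but only stores the occupied ones in a compact array, with a 32 bit bitmap recording which slots are in use. The position in the compact array is the number of occupied slots below the one you want, which is exactly a population count.

```java
public final class HamtIndex {
    // The "magic numbers": 5 bits of hash per level, 32 slots per node, mask 0x1f.
    static final int BITS_PER_LEVEL = 5;
    static final int FANOUT = 1 << BITS_PER_LEVEL; // 32
    static final int MASK = FANOUT - 1;            // 0x1f

    /** Logical slot (0..31) that a hash selects at a tree level (0..6 for a 32 bit hash). */
    public static int slot(int hash, int level) {
        return (hash >>> (level * BITS_PER_LEVEL)) & MASK;
    }

    /** True if the node's bitmap says the logical slot is occupied. */
    public static boolean present(int bitmap, int slot) {
        return (bitmap & (1 << slot)) != 0;
    }

    /** Index into the node's compact array: the count of occupied slots below this one. */
    public static int arrayIndex(int bitmap, int slot) {
        return Integer.bitCount(bitmap & ((1 << slot) - 1));
    }

    public static void main(String[] args) {
        int bitmap = (1 << 3) | (1 << 10) | (1 << 31); // slots 3, 10 and 31 occupied
        int hash = (7 << 5) | 3;                       // slot 3 at level 0, slot 7 at level 1
        System.out.println(slot(hash, 0) + " " + slot(hash, 1));
        System.out.println(present(bitmap, 10) + " " + arrayIndex(bitmap, 10));
    }
}
```

A node with three entries only needs a three element array, which is where the memory saving over a full 32 element array per node comes from.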

I tried to restrain myself from premature optimization and took the simplest approach I could. I'm sure it could be made to use less memory and run faster. But the algorithm is good, so speed should be reasonable. And it will certainly use less memory than naive copy on write.

It was actually a nice break from slogging away getting our accounting application tests to run on jSuneido.

PS. I realize that Git uses a persistent data structure, which is why it is so "cheap" to make new versions. I started implementing a Git-like system in Suneido a while ago, but at that point I hadn't run into persistent data structures. But tree data structures are not strangers anyway due to Suneido's btree database indexes.

Monday, October 05, 2009

Building the Perfect Beast

I just watched a good presentation by Rich Hickey on Persistent Data Structures and Managed References.

In it he mentions that Clojure's software transactional memory (STM) doesn't do read tracking.

That caught my attention. Read tracking (and validation) is one of the big hassles in multi-version concurrency like in Suneido's database.

I thought maybe there was a way to avoid it that I'd missed so I did some digging. Sadly, what I discovered is that Clojure's STM only implements snapshot isolation (SI).

This means you can still get "write skew" anomalies where multiple concurrent update transactions each write data that the other reads, leading to results that would not (could not) happen if the transactions were serialized.
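To make write skew concrete, here is a toy sketch. It is not how Clojure's STM or Suneido's database is implemented; the Store and Txn classes and the trackReads flag are invented for the example. Two overlapping transactions each check on their own snapshot that both x and y are set, and then clear one of them. The write sets don't overlap, so a write-only conflict check lets both commit and the intended rule "at least one of x and y stays set" is broken. Validating reads at commit time makes the second transaction abort instead.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

public final class WriteSkewDemo {
    /** Toy store: current values plus the commit number that last wrote each key. */
    static final class Store {
        final Map<String, Integer> data = new HashMap<>();
        final Map<String, Integer> lastWrite = new HashMap<>();
        int commits = 0;

        Txn begin(boolean trackReads) { return new Txn(this, trackReads); }
    }

    static final class Txn {
        private final Store store;
        private final boolean trackReads;
        private final int start; // commit number when this transaction began
        private final Map<String, Integer> snapshot;
        private final Map<String, Integer> writes = new HashMap<>();
        private final Set<String> reads = new HashSet<>();

        Txn(Store store, boolean trackReads) {
            this.store = store;
            this.trackReads = trackReads;
            this.start = store.commits;
            this.snapshot = new HashMap<>(store.data); // naive copy, fine for a toy
        }

        int read(String key) {
            reads.add(key);
            Integer own = writes.get(key);
            return own != null ? own : snapshot.get(key);
        }

        void write(String key, int value) { writes.put(key, value); }

        /** Commits unless a checked key was written by a transaction that committed after we began. */
        boolean commit() {
            Set<String> toCheck = new HashSet<>(writes.keySet());
            if (trackReads) toCheck.addAll(reads); // read validation (assumed flag for this sketch)
            for (String key : toCheck)
                if (store.lastWrite.getOrDefault(key, 0) > start) return false; // conflict, abort
            store.commits++;
            for (Map.Entry<String, Integer> e : writes.entrySet()) {
                store.data.put(e.getKey(), e.getValue());
                store.lastWrite.put(e.getKey(), store.commits);
            }
            return true;
        }
    }

    /** Runs two overlapping transactions and returns the final x + y. */
    public static int run(boolean trackReads) {
        Store store = new Store();
        store.data.put("x", 1);
        store.data.put("y", 1);
        Txn t1 = store.begin(trackReads);
        Txn t2 = store.begin(trackReads);
        // Each one only clears its item if, on its snapshot, the other would still be set.
        if (t1.read("x") + t1.read("y") >= 2) t1.write("x", 0);
        if (t2.read("x") + t2.read("y") >= 2) t2.write("y", 0);
        t1.commit();
        t2.commit();
        return store.data.get("x") + store.data.get("y");
    }

    public static void main(String[] args) {
        System.out.println("snapshot isolation only: x + y = " + run(false));
        System.out.println("with read validation:    x + y = " + run(true));
    }
}
```

With write-only checking the total ends up at 0, which no serial ordering of the two transactions could produce. With read validation the second commit is rejected and the total stays at 1.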

Suneido implements serializable transactions, not just snapshot isolation, to prevent these kinds of anomalies. (I like how they call them "anomalies"; it doesn't sound as bad as "errors".)

Clojure implements snapshot isolation because it's simpler, easier, faster, etc.

Databases like Oracle and PostgreSQL supply snapshot isolation when you request serializable, again, for performance reasons. Amusingly, PostgreSQL says "Serializable mode does not guarantee serializable execution..."

It reminds me of the old saying "if it doesn't have to work correctly, you can make it as small/fast/easy as you want".

But while I was digging into this, I found that there is a way to make snapshot isolation serializable using commit ordering. Wow, if there is a way to avoid read tracking/validation and still be serializable that seems like the best of both worlds.

I found a paper on Serializable Isolation for Snapshot Databases but if I understand it correctly from a quick read, it simply replaces read tracking with a new kind of read lock. I have to study it a bit more to figure out the advantage. I think it may keep the special read locks for a shorter period of time than read tracking. And I think it will detect potential anomalies earlier, avoiding the read validation phase at commit time.

But this paper doesn't seem to have anything to do with commit ordering so I'm unsure if that's yet another approach or whether I'm just missing the connection.

Sometimes I think my father was right, that I should have become an academic so I could spend all my time on this kind of thing. But I think that's a fantasy. First there's all the politics in academia (that I would hate). Then there's the need to specialize so much (whereas I like to jump around). And finally, I like to have a practical end to what I'm doing - actual real users benefiting, not just a paper published in some journal to be read by other academics.

* Building the Perfect Beast is a Don Henley song

Snow Leopard Technology

Mac OS X 10.6 Snow Leopard: the Ars Technica review - Ars Technica

There's some good stuff in this article - 64 bit, LLVM, Clang, concurrency, GCD, etc.

Here's a quote I can relate to.

"The prospect of an automated way to discover bugs that may have existed for years in the depths of a huge codebase is almost pornographic to developers—platform owners in particular."

Saturday, October 03, 2009

Write Your Own Compiler

I recently listened to a podcast of Scott Hanselman talking to Joel Spolsky.

One of the things they talked about was how Fog Creek (Spolsky's company) wrote their own compiler because it was easier than rewriting their application, which was written in an ancient version of VBScript.

I've always been a little defensive about how my company writes their applications in our own language (Suneido). At best people look at me funny, at worst they think I'm crazy. Our salesmen have to skirt around the issue because it scares people to hear you're not using big name tools. As the old saying goes, "no one gets fired for buying IBM". Nowadays you can substitute Microsoft or Oracle or Java.

So it was heartening to hear Spolsky talk about (aka defend) why they wrote their own compiler. One of his points was that writing a compiler is not that hard. People tend to think it's a big deal, but for a smallish language it's not that big a job. For example, Suneido's compiler makes up a tiny fraction (less than 1%) of the total source code in my company's main application.

I'm the first to admit that part of the reason is simply that I like developing languages (and database servers, and IDEs, and frameworks) better than I like writing applications.

But there are other reasons. Having your own platform has its costs, but it also has its benefits. It's a good way to insulate yourself from the fast pace of change in the computer industry. My company still has customers running software that we originally wrote on MS-DOS. The exact same application code is now running on Vista. There are not many commercial platforms that can claim that. Even things like Java that are supposed to be stable platforms change a lot over the years. And Microsoft changes languages and frameworks faster than you want to keep up with. (Spolsky's problem.)

Of course, that can mean you're not using the latest bleeding edge technology, but that's not such a bad thing for a business application.
